Introduction

Generative AI lets us respond to questions and situations dynamically and relatively intelligently. However, in many scenarios text-based solutions are not suitable from a user-experience point of view, which has led to a rise in voice-enabled applications. But what about the next evolution, video use cases?

What if we could create realistic, human-like situations visually? What applications could that unlock?

In this blog, we will build an AI avatar capable of handling sales training and conducting user interviews. This solution combines large language models (LLMs), video, and speech models to create an interactive experience. We’ll orchestrate all of this using Cerebrium, a serverless AI infrastructure platform that simplifies application development and deployment. This post will guide you through the steps to recreate this system, with access to the code and a demo to experiment with.

You can find the demo here and the final code repo here

Cerebrium

First, let us create our Cerebrium project. If you don’t have a Cerebrium account, you can create one by signing up here and following the documentation here to get set up.

In your IDE, run the following command to create our Cerebrium starter project: cerebrium init ecommerce-live-stream. This creates two files:

main.py - Our entrypoint file where our code lives

cerebrium.toml - A configuration file that contains all our build and environment settings. This will be used in creating our deployment environment.

Cerebrium is the underlying platform on which our entire application will run. As we go through the tutorial, we will edit the two files above as well as add additional files.

To create the training/interview experience, we need function calling, and therefore a model that supports it. In this tutorial we wanted to try Mistral’s function calling ability. Below we show how you can create an OpenAI-compatible endpoint on Cerebrium.

Mistral Function Calling

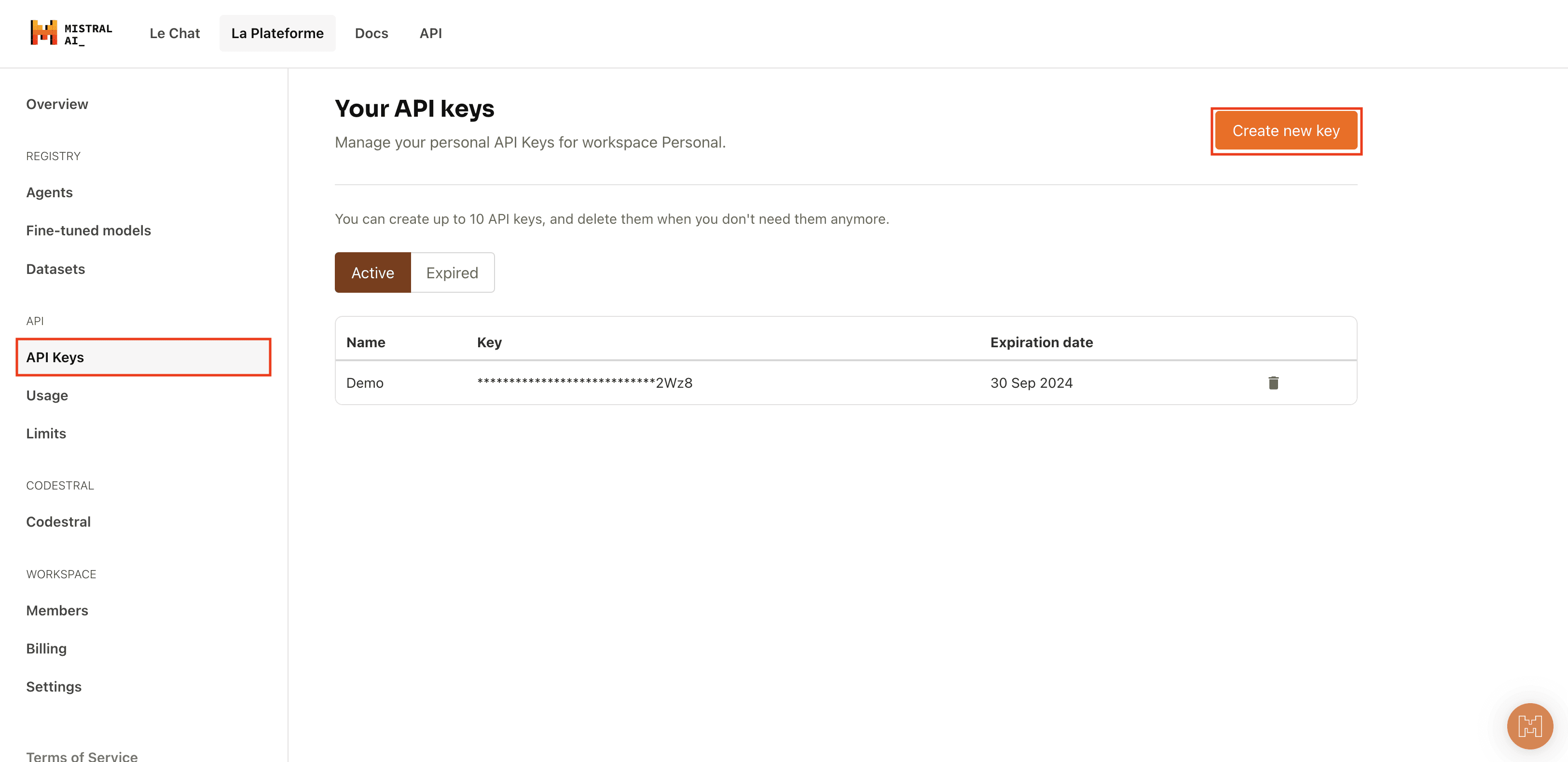

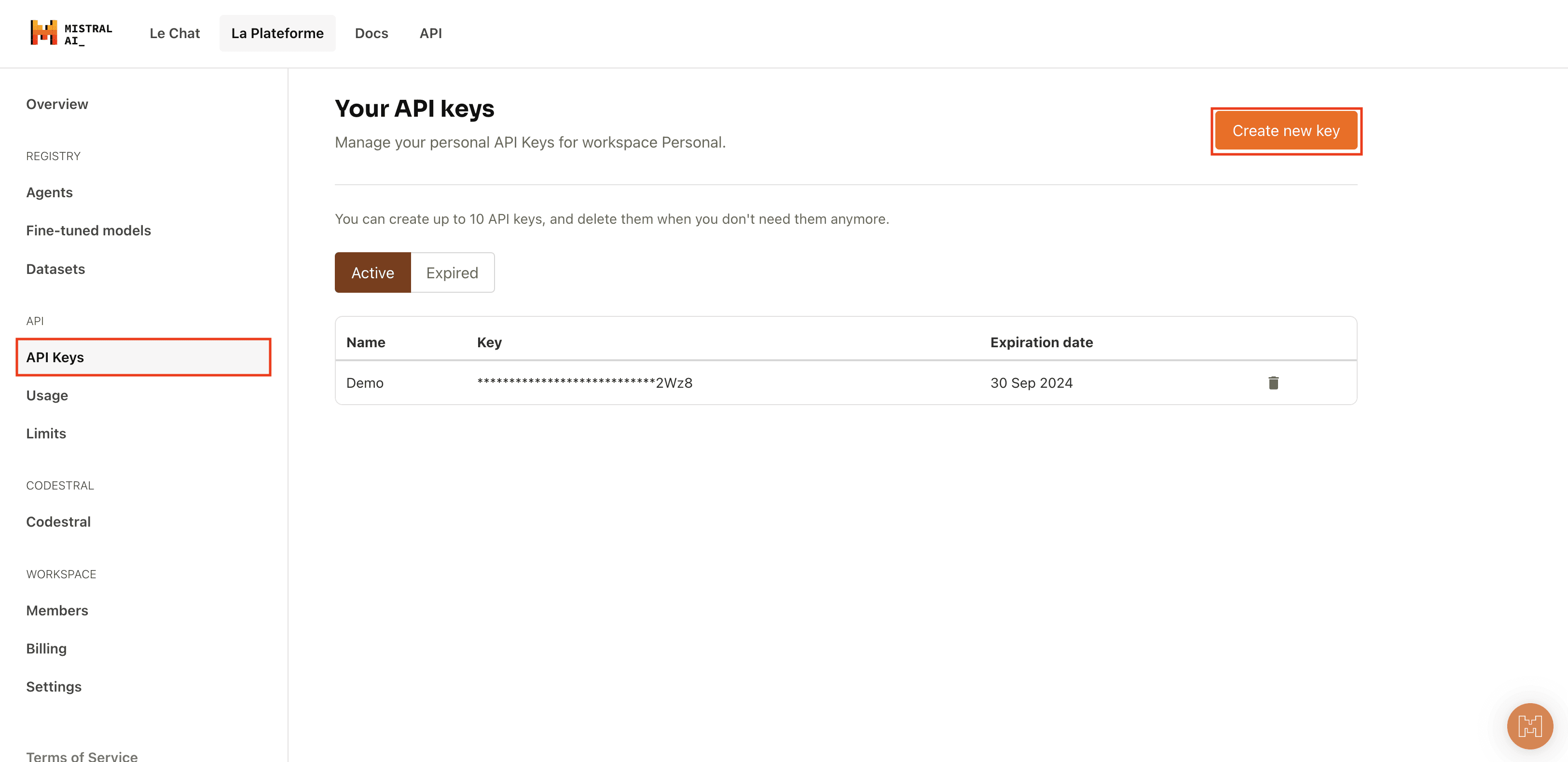

We will be using Mistral’s large model via their API, which requires you to sign up for an account here. Alternatively, you can host the Mistral 7B model on Cerebrium, since it is also capable of function calling; this leads to a very noticeable decrease in latency. This repo here shows how you could go about implementing it.

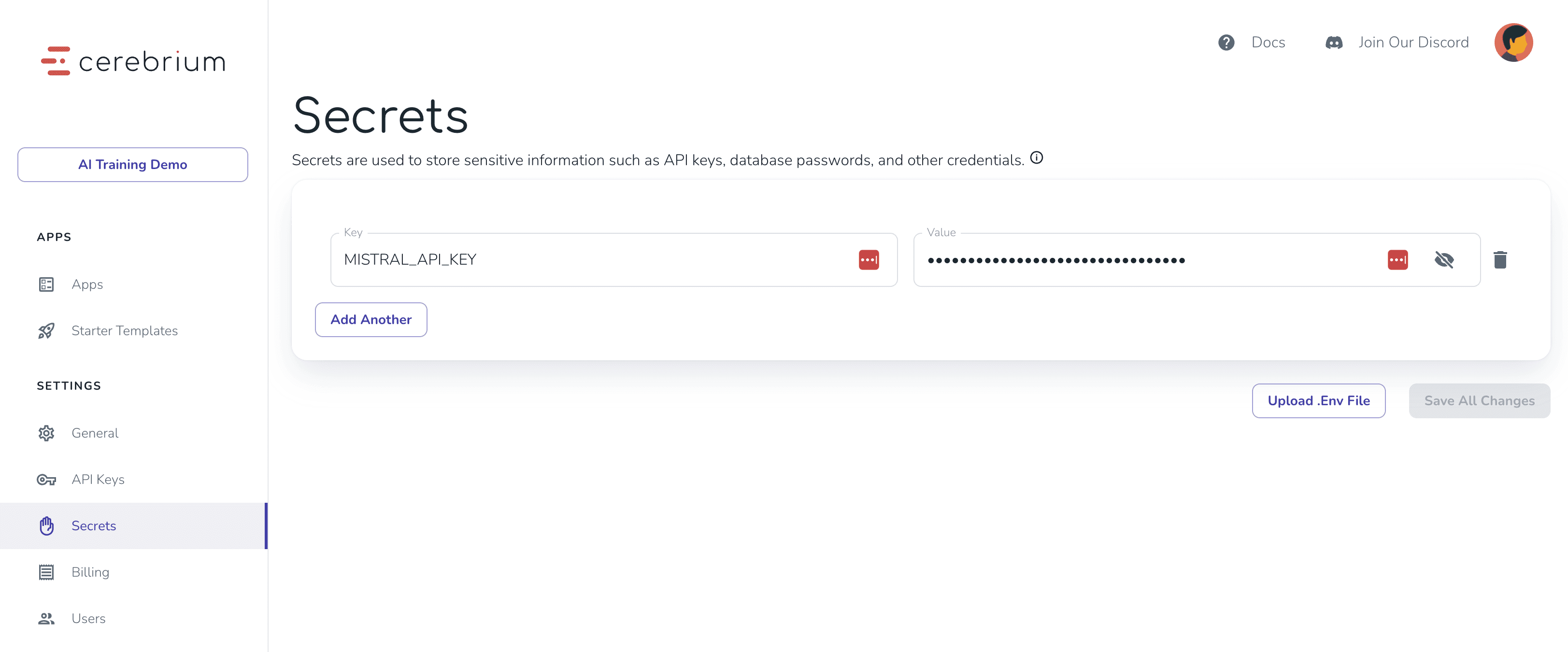

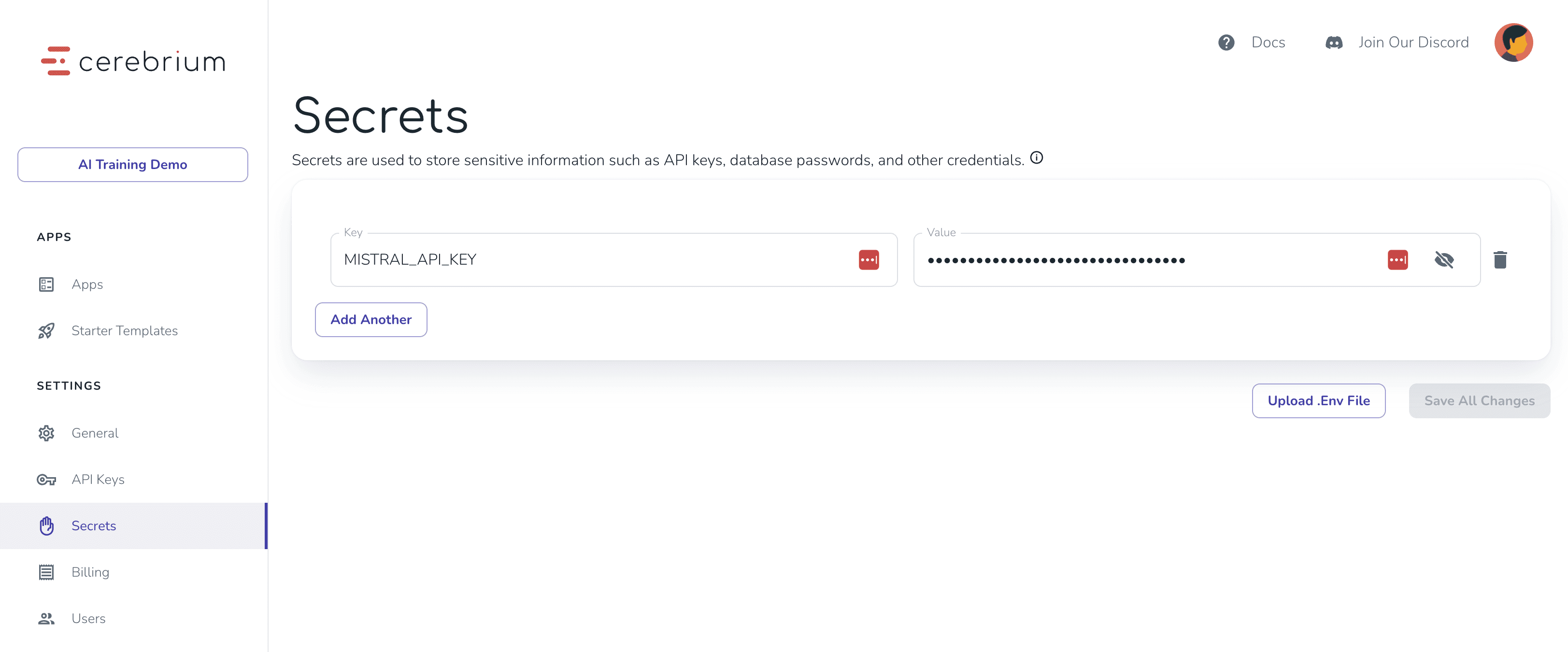

Once you have created an account, create a new API key that expires at a future date. You can then copy your API key to your Secrets in the Cerebrium Dashboard. Secrets are a way for you to access sensitive keys in your code without exposing them publicly. We will show you how to use this later in your code.

To use Mistral on Cerebrium, add the Mistral Python package to the pip dependencies in your cerebrium.toml. Cerebrium will automatically install it when building your Python environment on deploy.

[cerebrium.dependencies.pip]
mistralai = "latest"
requests = "latest"

Next, we need to define the functionality we would like our model to call. In this case we want to make sure the account executive ticks certain boxes in their call such as providing a timeline of when the problem will be solved, setting up a follow up meeting etc. We will use the function calling ability of Mistral to do this.

Below we create the functions for our sales example (the interview functions can be found in the Git repository) and then define them in the format required by our Mistral model.

import functools

# Each function returns a hint that steers the next step of the conversation.
def acknowledge_problem(issue: str):
    print("Acknowledging problem", flush=True)
    return "Once the user has apologized and acknowledged your problem, ask what they are going to do to solve your problem!"

def issues():
    print("Asking about issues", flush=True)
    return "Once the user has asked you about what issues you are experiencing, tell them you have had many platform outages over the last week leading to a loss of customers and you want to know what they are going to do to solve your problem!"

def propose_solution(performance_solution: str, cost_solution: str = ""):
    print("propose_solution", flush=True)
    return "Once the user has suggested possible solutions or next steps, ask when will these solutions be implemented!"

def provide_timeline(performance_timeline: str):
    print("provide_timeline", flush=True)
    return "Once the user has given a potential timeline of when these solutions will be implemented, ask if you can schedule a follow up to make sure they have met these tasks!"

def schedule_followup(followup_date: str, followup_type: str):
    print("schedule_followup", flush=True)
    return "Once the user has suggested a follow up, tell them that the proposed date and time suits you."

# Maps the tool names the model returns to the Python functions to run
sales_names_to_functions = {
    'acknowledge_problem': functools.partial(acknowledge_problem),
    'issues': functools.partial(issues),
    'propose_solution': functools.partial(propose_solution),
    'provide_timeline': functools.partial(provide_timeline),
    'schedule_followup': functools.partial(schedule_followup)
}

In each of these functions, you can run any functionality you like, such as updating your database or calling an endpoint. To lead the conversation in a certain direction, I simply return a message that I add to the system prompt. Based on the context of the messages, the LLM knows how to steer the conversation and is accurate in doing so.
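As a minimal sketch of the dispatch step described above (not the exact code from the repository), the snippet below takes a tool name and its JSON-encoded arguments, looks up the matching Python function, and appends the returned hint to the system prompt. The `dispatch_tool_call` helper and the stubbed `provide_timeline` are illustrative assumptions.

```python
import json

def provide_timeline(performance_timeline: str) -> str:
    # Stub standing in for one of the sales functions (assumption for this sketch)
    return "Ask if you can schedule a follow up."

names_to_functions = {"provide_timeline": provide_timeline}

def dispatch_tool_call(name: str, arguments: str, messages: list) -> str:
    """Run the named tool and append its hint to the first system message."""
    params = json.loads(arguments) if arguments else {}
    result = names_to_functions[name](**params)
    for msg in messages:
        if msg["role"] == "system":
            msg["content"] += f" {result}"
            break
    return result

messages = [{"role": "system", "content": "You are a frustrated customer."}]
dispatch_tool_call("provide_timeline", '{"performance_timeline": "two weeks"}', messages)
print(messages[0]["content"])
```

Keeping the lookup in a plain dictionary means adding a new scenario only requires a new function and a new dictionary entry.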

Below are the tool definitions in the format our LLM expects:

sales_tools = [
    {
        "type": "function",
        "function": {
            "name": "acknowledge_problem",
            "description": "Use this function to verify that the head of delivery apologizes and acknowledges the problem at hand",
            "parameters": {
                "type": "object",
                "properties": {
                    "issue": {
                        "type": "string",
                        "description": "The issue acknowledged by the head of delivery"
                    },
                },
                "required": ["issue"]
            }
        }
    },
    {
        "type": "function",
        "function": {
            "name": "issues",
            "description": "Use this function when the user asks what problems you have been experiencing",
            "parameters": {
                "type": "object",
                "properties": {},
            }
        }
    },
    {
        "type": "function",
        "function": {
            "name": "propose_solution",
            "description": "Use this function when the account executive proposes a solution to address the client's concerns.",
            "parameters": {
                "type": "object",
                "properties": {
                    "performance_solution": {
                        "type": "string",
                        "description": "The proposed solution for the performance issues."
                    },
                },
                "required": ["performance_solution"]
            }
        }
    },
    {
        "type": "function",
        "function": {
            "name": "provide_timeline",
            "description": "Use this function when the account executive provides a timeline for implementing solutions.",
            "parameters": {
                "type": "object",
                "properties": {
                    "performance_timeline": {
                        "type": "string",
                        "description": "The timeline for addressing performance issues."
                    },
                },
                "required": ["performance_timeline"]
            }
        }
    },
    {
        "type": "function",
        "function": {
            "name": "schedule_followup",
            "description": "Use this function when the account executive schedules a follow-up meeting or check-in.",
            "parameters": {
                "type": "object",
                "properties": {
                    "followup_date": {
                        "type": "string",
                        "description": "The proposed date for the follow-up meeting."
                    },
                    "followup_type": {
                        "type": "string",
                        "description": "The type of follow-up (e.g., call, in-person meeting, email update)."
                    }
                },
                "required": ["followup_date", "followup_type"]
            }
        }
    }
]
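Because the tool definitions and the Python functions are maintained separately, they can drift apart. The sketch below is one possible sanity check, assuming a hypothetical helper named `missing_required_params` that is not part of the original code: it uses `inspect.signature` to confirm that every parameter a tool marks as required is accepted by the matching function.

```python
import inspect

def missing_required_params(tool: dict, func) -> list:
    """Return required tool parameters that the Python function does not accept."""
    required = tool["function"]["parameters"].get("required", [])
    accepted = inspect.signature(func).parameters
    return [name for name in required if name not in accepted]

def schedule_followup(followup_date: str, followup_type: str):
    # Stub with the same signature as the sales function (assumption for this sketch)
    return "ok"

tool = {
    "type": "function",
    "function": {
        "name": "schedule_followup",
        "parameters": {
            "type": "object",
            "properties": {
                "followup_date": {"type": "string"},
                "followup_type": {"type": "string"},
            },
            "required": ["followup_date", "followup_type"],
        },
    },
}

print(missing_required_params(tool, schedule_followup))  # []
```

Running a check like this at startup would surface a mismatch before the model ever tries to call the tool.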

Now let us create the function that actually calls the Mistral endpoint and handles the cases where we need to call our functions. You will see we are using our Mistral secret to authenticate the client. Mistral tells us when to call a function and with what arguments (if any); it is then our responsibility to run those functions in our code. We then pass the results back to the Mistral model so it can return the final result.

import json
from typing import List

from cerebrium import get_secret
from mistralai import Mistral

# Authenticate the Mistral client with the key stored in Cerebrium Secrets
api_key = get_secret("MISTRAL_API_KEY")
client = Mistral(api_key=api_key)

async def run(messages: List, model: str, run_id: str, stream: bool = True, tool_choice: str = "auto", tools: List = [], names_to_functions: dict = {}):
    # Always use Mistral's large model, regardless of the model requested
    model = "mistral-large-latest"
    # Drop empty assistant messages that carry no tool calls
    messages = [msg for msg in messages if not (msg["role"] == "assistant" and msg["content"] == "" and (not msg.get("tool_calls") or msg["tool_calls"] == []))]

    stream_response = await client.chat.stream_async(
        model=model,
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )

    async for chunk in stream_response:
        if chunk.data.choices[0].delta.content:
            # Plain text: stream it straight through to the caller
            yield json.dumps(format_openai_response(chunk)) + "\n"
            messages.append({"role": "assistant", "content": chunk.data.choices[0].delta.content, "tool_calls": []})
        elif chunk.data.choices[0].delta.tool_calls:
            # Record the assistant's tool call request in the history
            tool_obj = {
                "role": 'assistant',
                "content": chunk.data.choices[0].delta.content,
                "tool_calls": [
                    {
                        'id': tool_call.id,
                        'type': 'function',
                        'function': {
                            'name': tool_call.function.name,
                            'arguments': tool_call.function.arguments
                        }
                    } for tool_call in chunk.data.choices[0].delta.tool_calls
                ] if chunk.data.choices[0].delta.tool_calls else []
            }
            messages.append(tool_obj)

        if chunk.data.choices[0].delta.tool_calls:
            for tool_call in chunk.data.choices[0].delta.tool_calls:
                function_name = tool_call.function.name
                function_params = json.loads(tool_call.function.arguments)
                function_result = names_to_functions[function_name](**function_params)
                messages.append({"role": "tool", "name": function_name, "content": "", "tool_call_id": tool_call.id})

                # Steer the conversation by appending the result to the system prompt
                for msg in messages:
                    if msg['role'] == 'system':
                        msg['content'] += f" {function_result}"
                        break

            messages = [msg for msg in messages if not (msg["role"] == "assistant" and msg["content"] == "" and (not msg.get("tool_calls") or msg["tool_calls"] == []))]

            # Call the model again so it can respond with the tool results in context
            new_stream_response = await client.chat.stream_async(
                model=model,
                messages=messages,
                tools=tools,
                tool_choice="auto",
            )

            accumulated_content = ""
            async for new_chunk in new_stream_response:
                if new_chunk.data.choices[0].delta.content:
                    accumulated_content += new_chunk.data.choices[0].delta.content
                    yield json.dumps(format_openai_response(new_chunk)) + "\n"
                if new_chunk.data.choices[0].finish_reason is not None:
                    messages.append({"role": "assistant", "content": accumulated_content, "tool_calls": []})

    print(messages, flush=True)

async def run_sales(messages: List, model: str, run_id: str, stream: bool = True, tool_choice: str = "auto", tools: List = []):
    async for response in run(messages, model, run_id, stream, tool_choice, sales_tools, sales_names_to_functions):
        yield response

You will notice that we format our streamed responses to be OpenAI compatible but also compatible with what Cerebrium expects. We created this helper function:

def format_openai_response(chunk):
    result = {
        'id': chunk.data.id,
        'model': chunk.data.model,
        'choices': [
            {
                'index': choice.index,
                'delta': {
                    'role': choice.delta.role,
                    'content': choice.delta.content,
                    "tool_calls": [
                        {
                            'id': tool_call.id,
                            'type': 'function',
                            'function': {
                                'name': tool_call.function.name,
                                'arguments': tool_call.function.arguments
                            }
                        } for tool_call in choice.delta.tool_calls
                    ] if choice.delta.tool_calls else []
                },
                'finish_reason': choice.finish_reason
            } for choice in chunk.data.choices
        ],
        'object': chunk.data.object,
        'created': chunk.data.created,
        'usage': {
            'prompt_tokens': chunk.data.usage.prompt_tokens if chunk.data.usage else 0,
            'completion_tokens': chunk.data.usage.completion_tokens if chunk.data.usage else 0,
            'total_tokens': chunk.data.usage.total_tokens if chunk.data.usage else 0
        }
    }
    return result
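On the consuming side, each streamed line is a JSON object in this shape. As a rough sketch, assuming a hypothetical `collect_content` helper and hand-written sample chunks rather than real model output, a client could reassemble the full reply like this:

```python
import json

def collect_content(lines):
    """Concatenate delta content from newline-delimited JSON chunks."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)

# Hand-written sample chunks in the same shape as the helper's output
sample = [
    json.dumps({"choices": [{"index": 0, "delta": {"content": "Hello"}, "finish_reason": None}]}) + "\n",
    json.dumps({"choices": [{"index": 0, "delta": {"content": " there"}, "finish_reason": "stop"}]}) + "\n",
]
print(collect_content(sample))  # Hello there
```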

At this point you can run cerebrium deploy to deploy this endpoint in its current form. You will notice that this endpoint is live at <deployment_url>/run_sales. Typically, OpenAI-compatible endpoints need to end in /v1/chat/completions. All Cerebrium endpoints are OpenAI compatible, meaning you can set your base URL to <deployment_url>/run_sales and Cerebrium will also route all <deployment_url>/run_sales/v1/chat/completions requests to this function.
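To make the routing concrete, here is a small sketch that builds the request an OpenAI-style client would send to this function. The `chat_completions_url` and `build_request` helpers, the placeholder deployment URL, and the Bearer token header are assumptions for illustration; check the Cerebrium documentation for the exact authentication scheme.

```python
def chat_completions_url(deployment_url: str, function_name: str = "run_sales") -> str:
    """Build the OpenAI-style chat completions path for a deployed function."""
    base = deployment_url.rstrip("/")
    return f"{base}/{function_name}/v1/chat/completions"

def build_request(deployment_url: str, token: str, messages: list) -> dict:
    """Assemble URL, headers, and body without sending anything."""
    return {
        "url": chat_completions_url(deployment_url),
        # Bearer auth is an assumption in this sketch
        "headers": {"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        "json": {"model": "mistral-large-latest", "messages": messages, "stream": True},
    }

req = build_request("https://example.invalid/my-app/", "<token>", [{"role": "user", "content": "Hi"}])
print(req["url"])
```

The returned dictionary can be passed to an HTTP client of your choice, for example `requests.post(**req, stream=True)`.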

Cartesia

For our demo, we wanted to be able to put the end user in many different scenarios, such as dealing with angry, frustrated customers or speaking to polite leads who match our ideal customer profile (ICP).

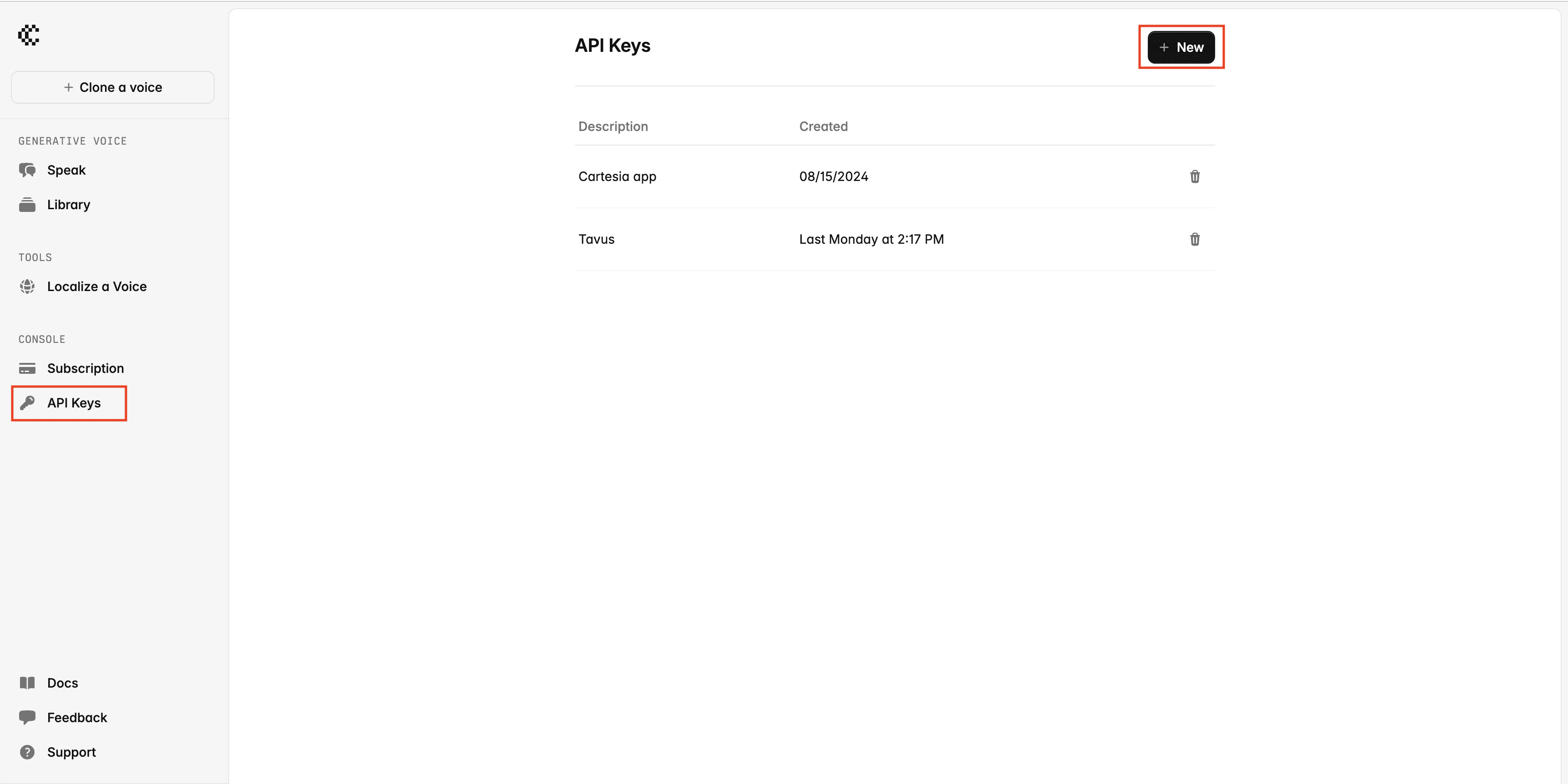

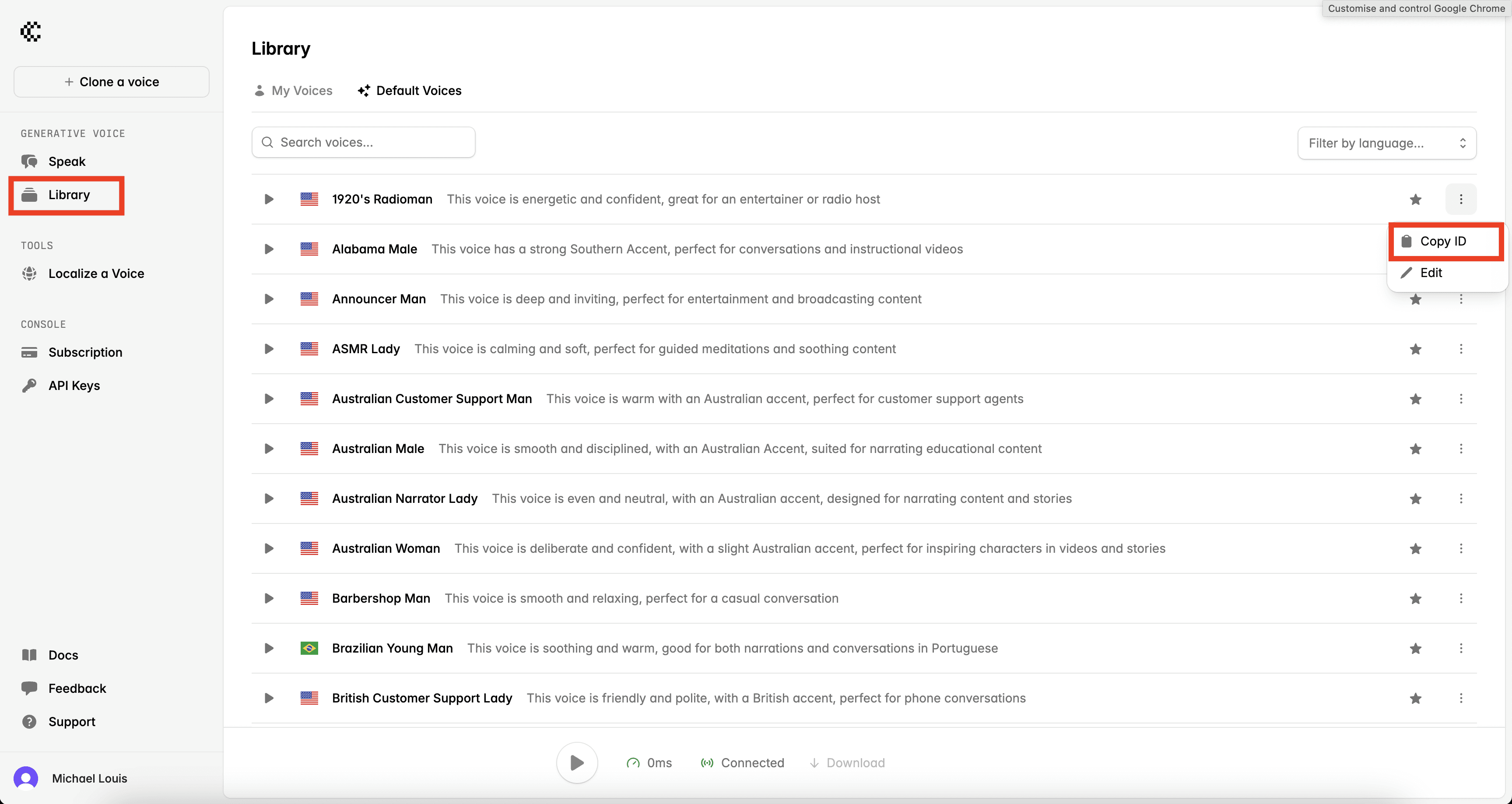

To do this, we will be using Cartesia. Cartesia offers a low-latency, hyper-realistic voice API that comes with emotional controls, meaning we can offer voices that are angry in tone, speak very fast, and so on. You can sign up for an account here.

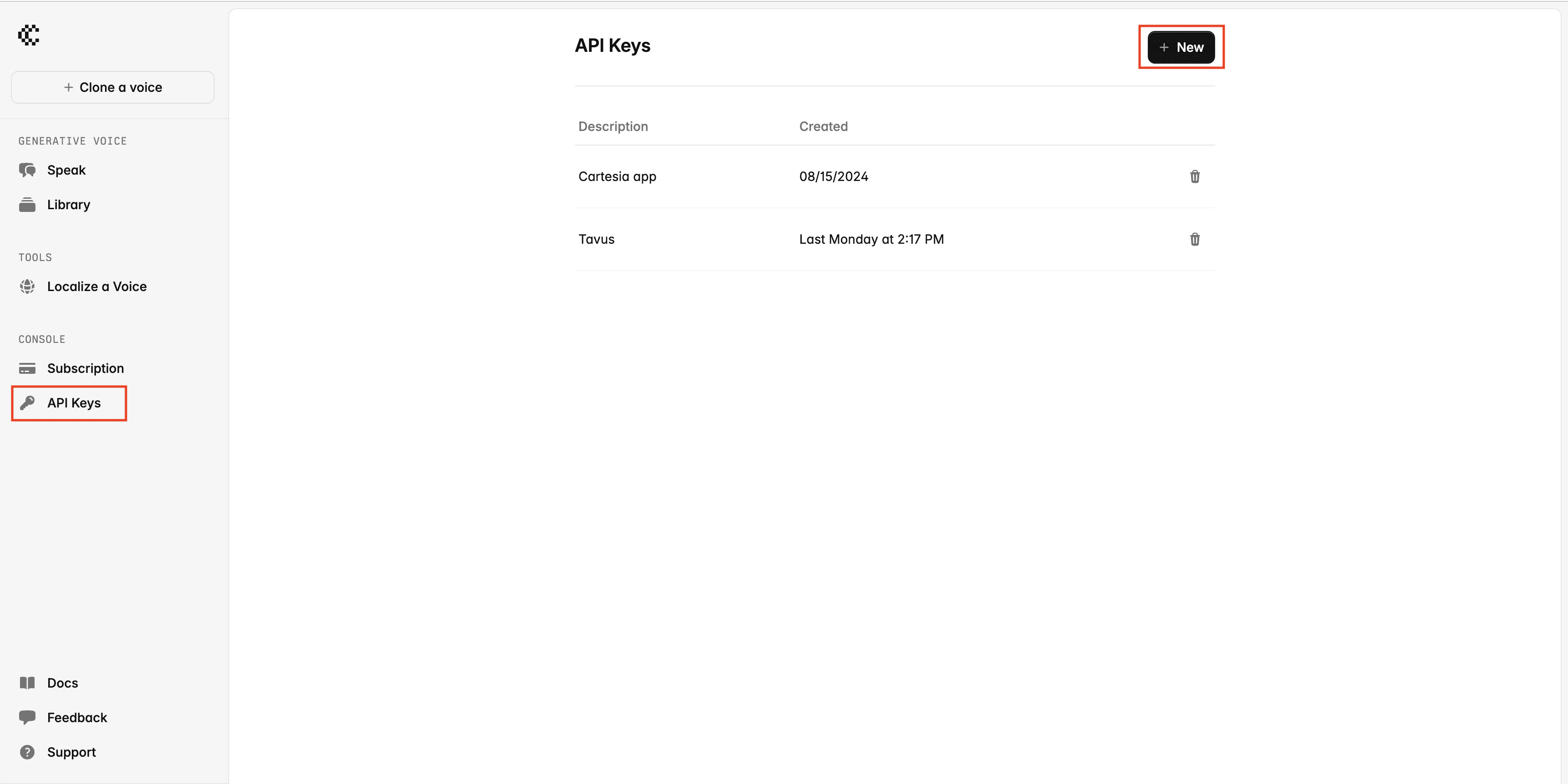

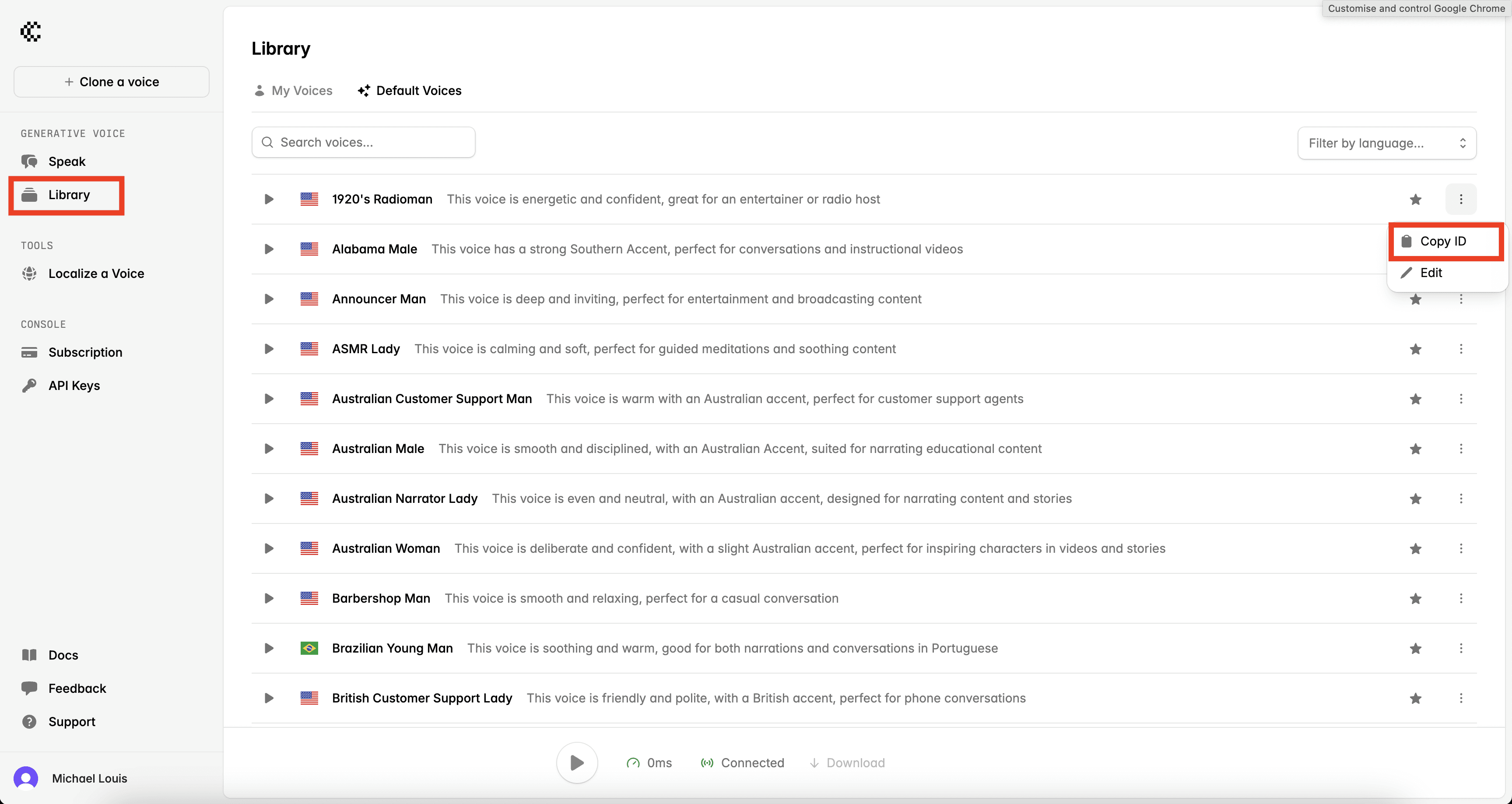

Once you have signed up, create an API key and add it to your Cerebrium Secrets - we will be using this in our setup later. I would then recommend playing with different voices and adjusting the emotional controls to get an idea of the experience.

Once you have found a voice that you like, get the voice ID - we will need this in the next section.

Tavus

Tavus allows you to build AI-generated video experiences in your application using an API. We will be using it to create our AI avatar, giving us the most realistic setting for our sales training.

Tavus is extremely modular! You can use their pre-built avatars or train your own avatar based on video recordings you have. It also allows you to use their version of GPT-4 or any OpenAI-compatible endpoint. Lastly, you can use any TTS provider; in our case we will be using Cartesia, which we set up above.

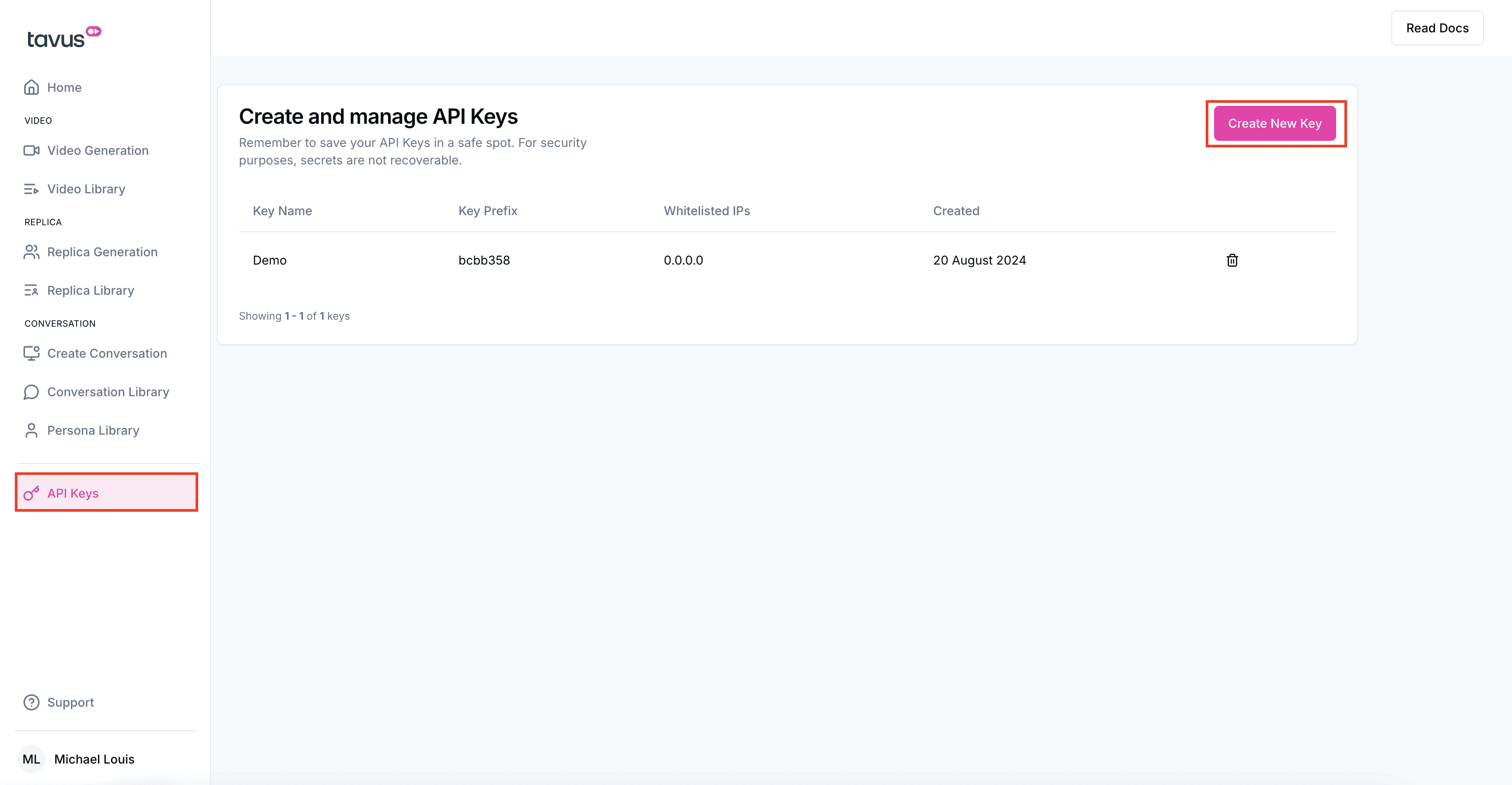

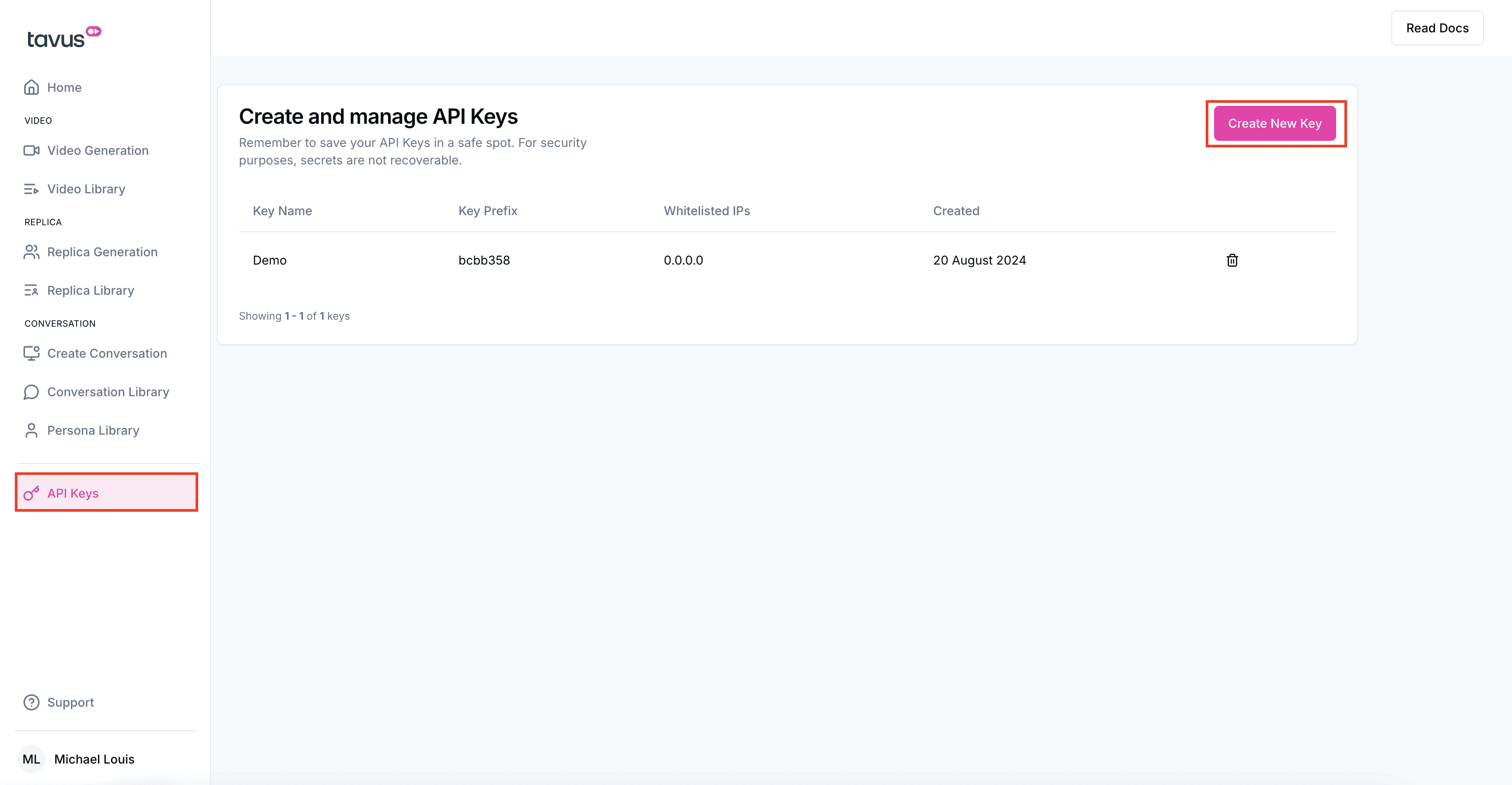

You can sign up to Tavus here. We need to generate our API Key and add it to our Cerebrium Secrets.

To create an AI avatar with custom settings (LLM and voice), we need to create a persona with these settings. You can define the following function to create personas:

def create_persona(type: str = "sales"):
    import requests

    url = "https://tavusapi.com/v2/personas"

    payload = {
        "persona_name": "Sales Coach" if type == "sales" else "Interview Coach",
        "system_prompt": "You are the lead engineer at an AI company called Pillowsoft, and are frustrated with your infrastructure provider, AI Infra. They have ongoing platform outages that are causing issues on your platform. Your job is to find out when AI Infra will remedy these issues. Keep your responses relatively short. Ask for clarification if a user response is ambiguous." if type == "sales" else "You are the lead recruiter at the AI company Pillowsoft and are recruiting for a multitude of roles. Be very polite, professional and conversational.",
        "context": "You are on a call with an account executive from AI Infra, the provider of your platform's machine learning infrastructure. Their repeated service disruptions are causing downtime for your platform, leading to unhappy customers and affecting your business. You are seeking a solution and demanding accountability from AI Infra for when they will solve these issues." if type == "sales" else "You are on a call with a potential candidate who applied for a job at your company. Be very polite and upbeat. This is your first call with them so you are just trying to gather some initial data about them.",
        "layers": {
            # Point Tavus at our OpenAI-compatible Cerebrium endpoint
            "llm": {
                "model": "mistral-large-latest",
                "base_url": "https://api.cortex.cerebrium.ai/v4/p-d08ee35f/coaching-training/run_sales" if type == "sales" else "https://api.cortex.cerebrium.ai/v4/p-d08ee35f/coaching-training/run_interview",
                "api_key": get_secret("CEREBRIUM_JWT"),
                "tools": sales_tools if type == "sales" else interview_tools
            },
            # Cartesia voice with emotional controls per scenario
            "tts": {
                "api_key": get_secret("CARTESIA_API_KEY"),
                "tts_engine": "cartesia",
                "external_voice_id": "820a3788-2b37-4d21-847a-b65d8a68c99a",
                "voice_settings": {
                    "speed": "fast" if type == "sales" else "normal",
                    "emotion": ["anger:highest"] if type == "sales" else ["positivity:high"]
                },
            },
            "vqa": {"enable_vision": "false"}
        }
    }

    headers = {
        "x-api-key": get_secret("TAVUS_API_KEY"),
        "Content-Type": "application/json"
    }

    response = requests.request("POST", url, json=payload, headers=headers)
    print(response)
    return response

Now that we have created our personas, we need to copy the persona IDs into the code below, which creates a conversation. In your main.py, add the following:

import requests

def create_tavus_conversation(type: str):
    if type not in ["sales", "interview"]:
        raise ValueError("Type must be either 'sales' or 'interview'")

    url = "https://tavusapi.com/v2/conversations"

    payload = {
        "replica_id": "r79e1c033f",
        # Persona IDs returned when creating the personas above
        "persona_id": "pb6df328" if type == "sales" else "paea55e8",
        "callback_url": "https://webhook.site/c7957102-15a7-49e5-a116-26a9919c5c8e",
        "conversation_name": "Sales Training with Candidate" if type == "sales" else "Interview with Candidate",
        "custom_greeting": "Hi! Let's jump straight into it! We have been having a large number of issues with your platform and I want to have this call to try and solve it" if type == "sales" else "Hi! Nice to meet you! Please can you start with your name and telling me a bit about yourself.",
        "properties": {
            "max_call_duration": 300,
            "participant_left_timeout": 10,
            "enable_recording": False,
        }
    }

    headers = {
        "x-api-key": get_secret("TAVUS_API_KEY"),
        "Content-Type": "application/json"
    }

    response = requests.request("POST", url, json=payload, headers=headers)
    print(response.json())
    return response.json()

You will notice that we set a custom_greeting message - this is the message the avatar will greet you with when joining the call. The callback_url is what Tavus uses to update you on the state of the call (started, ended, etc.) - we didn't really need this functionality, so we just used a placeholder webhook from webhook.site.

Deploy

To deploy this application to Cerebrium you can simply run the command: cerebrium deploy in your terminal.

If it deployed successfully, you should see something like this:

Frontend

Once you have deployed your Cerebrium application we simply need to connect it to our frontend. To get started, you can clone the following Github repository:

In the .env file, populate your Cerebrium deployment in the format:

Then you can run:

You should now be able to start a simulated training or interview session that is customized to your configuration :)

Conclusion

This AI avatar unlocks a wide range of use cases for businesses looking to streamline training and onboarding processes. It enables consistent and scalable sales training, allowing staff to practice real-time conversations with AI-driven role-play. For interview applications, the avatar can simulate various interview scenarios, helping candidates prepare more effectively. These use cases reduce time and costs, while maintaining a high standard of training and engagement across teams.