Tutorial

May 19, 2024

Creating an Executive Assistant using LangChain, LangSmith, Cerebrium and Cal.com

Michael Louis

Founder

Introduction

As a startup founder, I have a very specific way that I like to split my time since I usually need to be everywhere all at once. What has worked best for me is:

Mornings are for my team only, in order to sync on current priorities as well as make sure no one is blocked. It’s also the time when I like to do my most demanding work.

Afternoons are for customers or potential partnership meetings since I am usually not at my mental peak (I am always at my peak).

Nights are for me to catch up on planning, as well as meeting with any friends or other entrepreneurs who ask me for help, advice etc.

I have never thought about getting an executive assistant since I don’t think it’s worth the cost at this stage, but it would be nice to not organise my calendar every day. Also, it’s a flex when someone says "Claire will coordinate a time for us to connect" because it suggests that their time is valuable.

I have been meaning to try out LangSmith, the monitoring tool from LangChain, and I thought an agent to manage my calendar would be a fun project. I also feel that, with all the advancements in text-to-speech (TTS) models, I could make this pretty professional given enough time. I’m hoping the open-source world can take this example and run with it!

In this tutorial, I am going to create an executive assistant, Cal-vin, to manage my calendar (Cal.com) with employees, customers, partners and friends. I will use the LangChain SDK to create my agent, the LangSmith platform to monitor how it is scheduling my time throughout the day and monitor situations in which it fails to do a correct job. Lastly, we will deploy this application on Cerebrium to show how it handles deploying and scaling our application seamlessly.

You can find the final version of the code here.

Concepts

To create an application like this, we will need to interact with my calendar based on instructions from a user. This is a perfect use case for an agent with function (tool) calling ability. LangChain is a framework with a lot of functionality supporting agents. Its team also created LangSmith, so the integration should be relatively easy.

When we refer to a tool, we are referring to any framework, utility, or system that has defined functionality around a use case. For example, we might have a tool to search Google, a tool to pull our credit card transactions etc.

LangChain also has three concepts/functions that we need to understand:

ChatModel.bind_tools(): This is a method for attaching tool definitions to model calls. Each model provider expects tools to be defined in a different way; however, LangChain has created a standard interface so you can switch between providers. You can pass in a tool definition (a dict), as well as other objects from which a tool definition can be derived, namely Pydantic classes, LangChain tools, and arbitrary functions. The tool definition tells the LLM what the tool does and how to interact with it.

AIMessage.tool_calls: This is an attribute on the AIMessage type returned from the model for easily accessing the tool calls the model decided to make. It specifies any tool invocations in the format defined by the bind_tools call.

create_tool_calling_agent(): This is a standard way to bring the above concepts together so that they work across providers with different formats, letting you easily switch out models.
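To make the shape of tool_calls more concrete, here is a minimal sketch in plain Python that dispatches a hand-written list of tool calls. Each entry mirrors the dict exposed on AIMessage.tool_calls (name, args and id). The two tool functions, their parameters and their return values are hypothetical stand-ins, and no model or LangChain code is actually invoked.

```python
from typing import Any, Callable, Dict, List


def get_availability(from_date: str, to_date: str) -> str:
    """Hypothetical stand-in for a calendar lookup tool."""
    return f"Free slots between {from_date} and {to_date}"


def book_slot(start: str, name: str) -> str:
    """Hypothetical stand-in for a booking tool."""
    return f"Booked {start} for {name}"


# Registry mapping tool names (as the model would emit them) to callables.
TOOLS: Dict[str, Callable[..., str]] = {
    "get_availability": get_availability,
    "book_slot": book_slot,
}


def run_tool_calls(tool_calls: List[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Execute each requested tool and pair the result with its call id."""
    results = []
    for call in tool_calls:
        tool = TOOLS[call["name"]]      # look up the tool by name
        output = tool(**call["args"])   # args is a dict of keyword arguments
        results.append({"tool_call_id": call["id"], "content": output})
    return results


# Hand-written example in the same shape as AIMessage.tool_calls.
example_calls = [
    {
        "name": "get_availability",
        "args": {"from_date": "2024-05-20", "to_date": "2024-05-21"},
        "id": "call_1",
    },
]
print(run_tool_calls(example_calls))
```

In a real agent, the agent executor performs this lookup-and-call loop for you and feeds each result back to the model.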

Setup Cal.com

I am a big fan of Cal.com and believe the team is going to keep shipping incredible features, so I wanted to build a demo using them. If you do not have an account you can create one here. Cal will be our source of truth, so if you update time zones or working hours in Cal, our assistant will reflect that.

Once your account is created, click on “API keys” in the left sidebar and create an API key with no expiration date.

To test that it’s working, you can make a simple curl request. Just replace the following variables:

Username

API key

The dateFrom and dateTo variables
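If you would rather run the same check from Python, the request can be sketched as below. This assumes the Cal.com v1 REST API at https://api.cal.com/v1, authenticated with an apiKey query parameter; the function names are hypothetical and the username and dates in the usage line are placeholders.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: base URL of the Cal.com v1 REST API.
CAL_API_BASE = "https://api.cal.com/v1"


def build_availability_url(api_key: str, username: str,
                           date_from: str, date_to: str) -> str:
    """Build the /availability URL with the four variables listed above."""
    query = urlencode({
        "apiKey": api_key,
        "username": username,
        "dateFrom": date_from,
        "dateTo": date_to,
    })
    return f"{CAL_API_BASE}/availability?{query}"


def fetch_availability(api_key: str, username: str,
                       date_from: str, date_to: str) -> dict:
    """Call the endpoint and return the parsed JSON body."""
    url = build_availability_url(api_key, username, date_from, date_to)
    with urlopen(url) as response:
        return json.load(response)


# Building the URL makes no network call; the key shown here is a placeholder.
print(build_availability_url("YOUR_API_KEY", "your-username",
                             "2024-05-20", "2024-05-27"))
```

Calling fetch_availability with your real key and username performs the request itself.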

You should get a response similar to the following:

Great! Now we know that our API key is working and pulling information from our calendar. The API calls we will be using later in this tutorial are:

/availability: Get your availability

/bookings: Book a slot

Cerebrium setup

If you don’t have a Cerebrium account, you can create one by signing up here and following the documentation here to get set up.

In your IDE, run the following command to create our Cerebrium starter project: cerebrium init 6-agent-tool-calling. This creates two files:

main.py - Our entrypoint file where our code lives

cerebrium.toml - A configuration file that contains all our build and environment settings

Add the following pip packages near the bottom of your cerebrium.toml. This will be used in creating our deployment environment.

We will be using OpenAI GPT-3.5 for our use case, so we need an API key from them. If you don’t have an account, you can sign up here. You can then create an API key here. The API key should be in the format: “sk-xxxxx”.

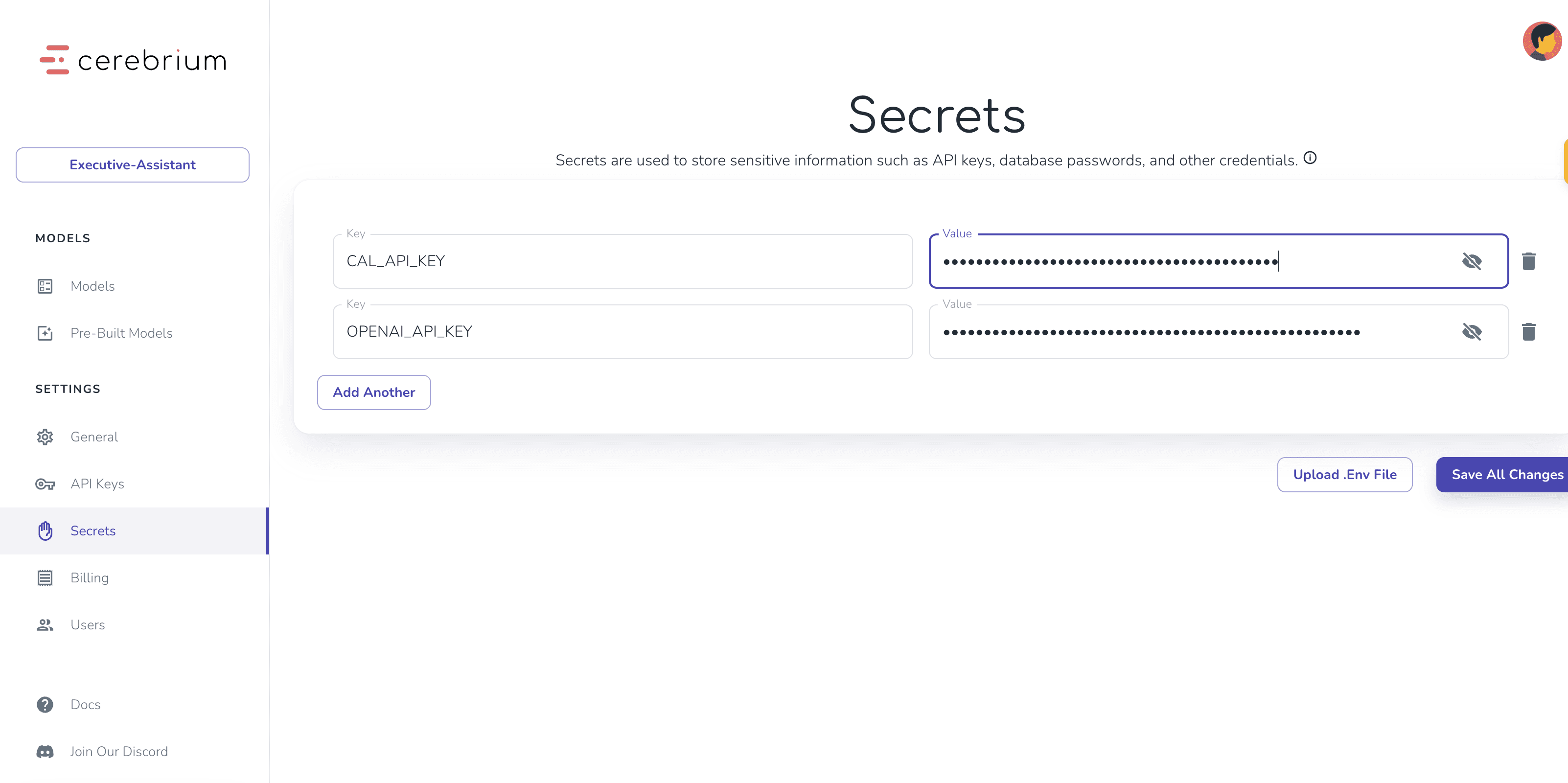

In your Cerebrium dashboard you can then add your Cal.com and OpenAI API keys as secrets by navigating to “Secrets” in the sidebar. For the sake of this tutorial I called mine “CAL_API_KEY” and “OPENAI_API_KEY”. We can now access these values at runtime without exposing them in our code.

Agent Setup

To start we need to write two tool functions that the agent will use to check availability on our calendar as well as book a slot.

Get availability tool

You would have seen from the test API request we did above to Cal.com that the API returns your availability in the following way:

The time slots that you are already busy

Your working hours on each day

Below is the code to achieve this

In the above snippet we are doing a few things:

We give our function the @tool decorator so that LangChain can tell the LLM this is a tool.

We write a docstring that explains to the LLM what this function does and what input it expects. The LLM will make sure it asks the user enough questions to collect this input data.

We wrote a helper function, find_available_slots, to take the information returned from the Cal.com API and format it so it’s more readable. It will show the user the time slots available on each day.
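As a rough illustration of what a helper like find_available_slots has to do, the sketch below subtracts busy intervals from one day's working hours. It is not the tutorial's implementation: the signature, the Interval type and format_slots are assumptions, and the inputs are plain datetime objects rather than the raw Cal.com response.

```python
from datetime import datetime
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (start, end)


def find_available_slots(work_start: datetime, work_end: datetime,
                         busy: List[Interval]) -> List[Interval]:
    """Return the gaps inside the working hours not covered by busy intervals."""
    free: List[Interval] = []
    cursor = work_start  # earliest time not yet accounted for
    for start, end in sorted(busy):
        if end <= cursor or start >= work_end:
            continue  # no overlap with the remaining working window
        if start > cursor:
            free.append((cursor, start))  # gap before this busy block
        cursor = max(cursor, end)
    if cursor < work_end:
        free.append((cursor, work_end))  # gap after the last busy block
    return free


def format_slots(slots: List[Interval]) -> List[str]:
    """Render slots as readable strings, e.g. '2024-05-20 09:00-10:00'."""
    return [f"{s:%Y-%m-%d %H:%M}-{e:%H:%M}" for s, e in slots]


day = datetime(2024, 5, 20)
busy_blocks = [
    (day.replace(hour=13), day.replace(hour=14, minute=30)),
    (day.replace(hour=10), day.replace(hour=11)),
]
print(format_slots(find_available_slots(day.replace(hour=9),
                                        day.replace(hour=17),
                                        busy_blocks)))
```

Sorting the busy blocks first keeps the sweep to a single pass and lets overlapping meetings collapse naturally, since the cursor only ever moves forward.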

We then follow a similar practice to write our book_slot tool. This will book a slot in my calendar based on the selected time/day.

Now that we have created our two tools let us create our agent:

The above snippet is used to create our agent executor which consists of:

Our prompt template:

This is where we can give instructions to our agent on what role it is taking on, its goal and how it should perform in certain situations etc. The more precise and concise this is, the better.

Chat History is where we will inject all previous messages so that the agent has context on what was said previously.

Input is new input from the end user.

We then instantiate our GPT-3.5 model, which is the LLM we will be using. You can swap this out for Anthropic or any other provider just by replacing this one line - LangChain makes this seamless.

Lastly, we join this all together with our tools to create an agent executor.

Setup Chatbot

The above code is static in that it will only reply to our first question but we might need to have a conversation in order to find a time that suits both the user and my schedule. We therefore need to create a chatbot with tool calling capabilities and the ability to remember past messages. LangChain supports this with RunnableWithMessageHistory().

It essentially allows us to store the previous replies of our conversation in a chat_history variable (mentioned above in our prompt template) and tie this all to a session identifier so your API can remember information pertaining to a specific user/session. Below is our code in order to implement this:

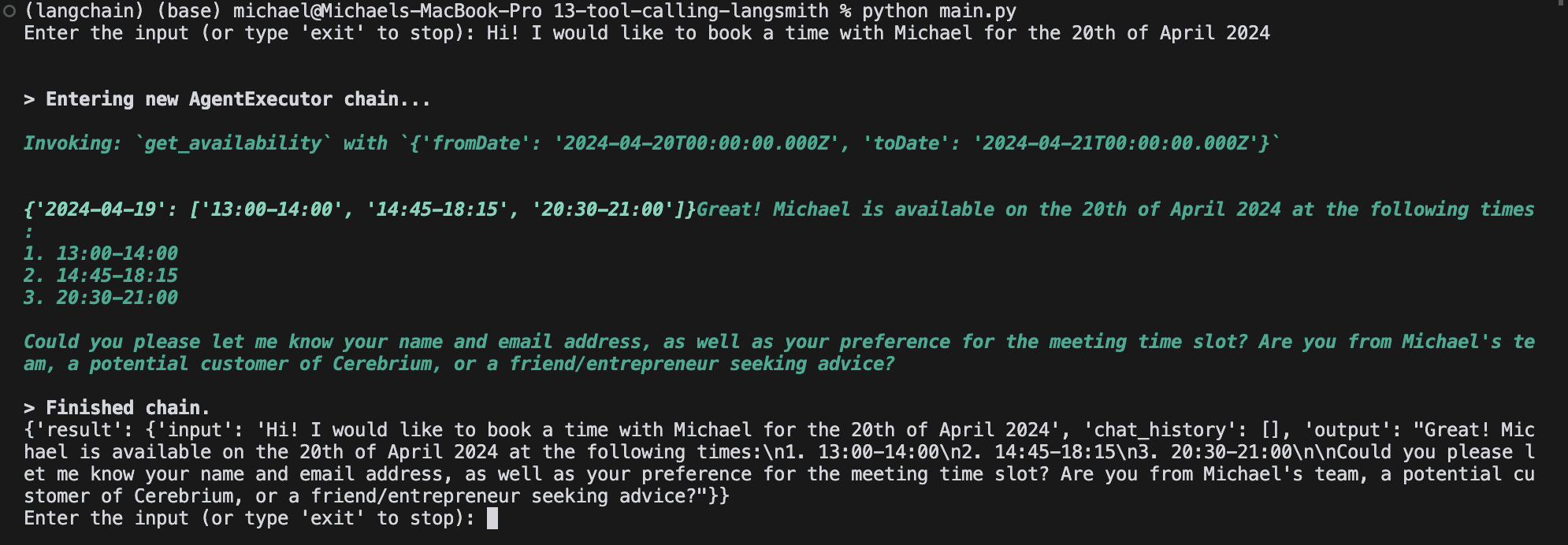
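To illustrate the idea of session-keyed history in isolation, here is a minimal sketch in plain Python. It is not the LangChain implementation: the in-memory store, the chat function and the echo_agent responder are all hypothetical, and a real deployment would likely want a persistent store rather than a module-level dict.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)

# One message list per session id, created lazily on first access.
_store: Dict[str, List[Message]] = defaultdict(list)


def get_session_history(session_id: str) -> List[Message]:
    """Return the mutable history for a given session."""
    return _store[session_id]


def chat(session_id: str, user_input: str,
         respond: Callable[[List[Message], str], str]) -> str:
    """Answer using prior context, then record both sides of the turn."""
    history = get_session_history(session_id)
    reply = respond(history, user_input)
    history.append(("human", user_input))
    history.append(("ai", reply))
    return reply


def echo_agent(history: List[Message], user_input: str) -> str:
    """Hypothetical stand-in for the agent executor."""
    return f"({len(history)} earlier messages) You said: {user_input}"


print(chat("session-a", "Can we meet Tuesday?", echo_agent))
print(chat("session-a", "2pm works", echo_agent))
print(chat("session-b", "Hello", echo_agent))
```

The second call for session-a sees the two messages from the first turn, while session-b starts with an empty history.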

Let us run a simple local test to make sure everything is working as expected.

The above code does the following:

We define a Pydantic object which specifies the parameters our API expects - the user prompt and a session id to tie the conversation to.

The predict function in Cerebrium is the entry point for our API so we just pass the prompt and session id to our agent and print the results.

To run this, simply run the following in your terminal: python main.py. You will need to replace your secrets with the actual values when running locally. You should then see output similar to the following:

If you keep talking and answering, you will see it will eventually book a slot.

Integrate LangSmith

When releasing an application to production, it’s vital to know how it is performing, how users are interacting with it, where it is going wrong etc. This is especially true for agent applications, since their workflows are non-deterministic and depend on how a user interacts with the application, so we want to make sure we handle any and all edge cases. LangSmith is a logging, debugging and monitoring tool from LangChain that we will use. You can read more about LangSmith here.

Let’s set up LangSmith in order to monitor and debug our application. First, add LangSmith as a pip dependency to our cerebrium.toml file.

Next, we need to create an account on LangSmith and generate an API key - it’s free 🙂. You can sign up for an account here and generate an API key by clicking the settings (gear icon) in the bottom left.

Next, we need to set the following environment variables. You can add the following code at the top of your main.py, and add the API key to your secrets in Cerebrium.
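A minimal sketch of that setup is shown below. It assumes the LANGCHAIN_-prefixed variable names that LangSmith documented at the time of writing; the configure_langsmith helper, the project name and the placeholder key are hypothetical. In a deployment you would pass in the value of the secret you stored in Cerebrium rather than a literal string.

```python
import os


def configure_langsmith(api_key: str, project: str = "cal-vin") -> None:
    """Set the environment variables that switch on LangSmith tracing.

    Assumption: variable names as documented by LangSmith in 2024.
    The default project name is a hypothetical placeholder.
    """
    os.environ["LANGCHAIN_TRACING_V2"] = "true"
    os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
    os.environ["LANGCHAIN_API_KEY"] = api_key
    os.environ["LANGCHAIN_PROJECT"] = project


# Placeholder key for illustration only; never hard-code a real key.
configure_langsmith("YOUR_LANGSMITH_API_KEY")
print(os.environ["LANGCHAIN_PROJECT"])
```

These need to be set before any LangChain code runs, which is why they belong at the top of main.py.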

Integrating tracing into your application is as easy as adding the @traceable decorator to your function(s). LangSmith automatically traverses our functions and subsequent calls, so we only need to put it above the predict function and we will see all the tool invocations and OpenAI responses automatically. If there is a function that predict doesn’t call but that you invoke another way, make sure to decorate it with @traceable as well. Edit main.py to have the following:

Easy! Now LangSmith is set up. Run python main.py to run your file and test booking an appointment with yourself.

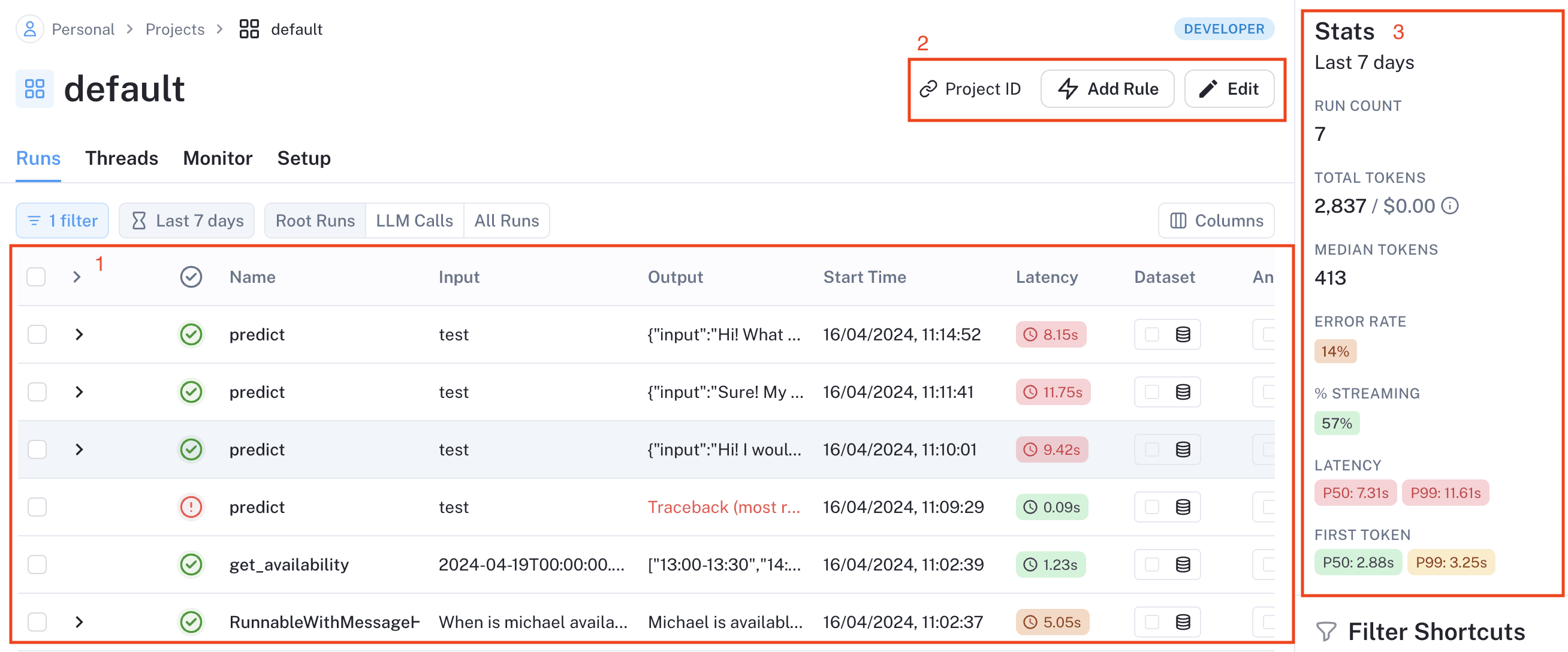

After you have completed a successful test run you should see data populating in LangSmith. You should see the following:

In the Runs tab, you can see all your runs (i.e. invocations/API requests).

In (1) above, each run takes the name of our function, and its input is set to the Cerebrium run ID, which in this case we set to “test”. You can also see the input as well as the total latency of your run.

LangSmith lets you create various automations based on your data. These can be:

Sending data to annotation queues that your team needs to label for positive and negative use cases

Sending to datasets that you can eventually train a model on

Running online evaluation, a new feature that allows you to use an LLM to evaluate data for rudeness, topic etc.

Triggering webhook endpoints

and much more…

You can set up these automations by clicking the “Add rule” button above (2) and specifying under what conditions you would like the above to occur. A rule is made up of a filter, a sampling rate, and an action.

Lastly, in (3) you can see overall metrics about your project such as number of runs, error rate, latency etc.

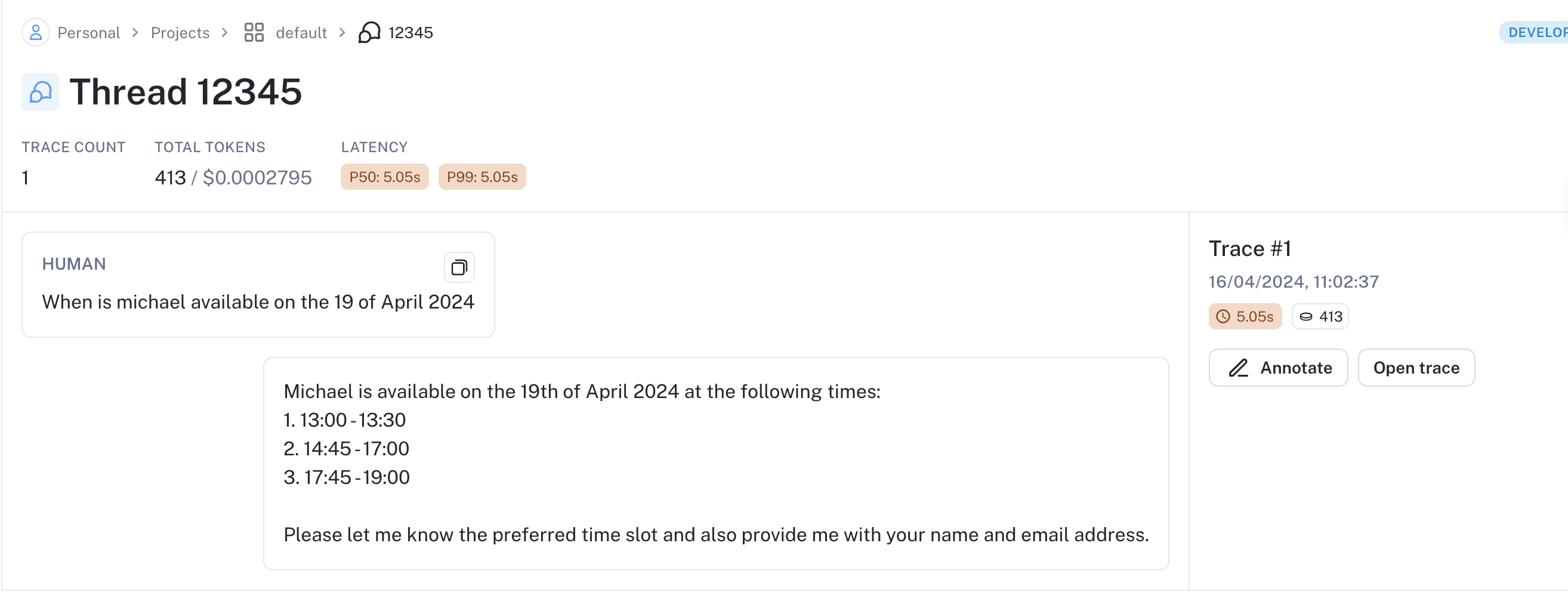

Since our interface is conversational, there are many use cases where you would like to follow the conversation between your agent and a user without all the bloat. Threads in LangSmith do exactly this. I can see how a conversation evolved over time and, if something seems out of the ordinary, I can open the trace to dive deeper. Note that threads are associated with the session id we passed in.

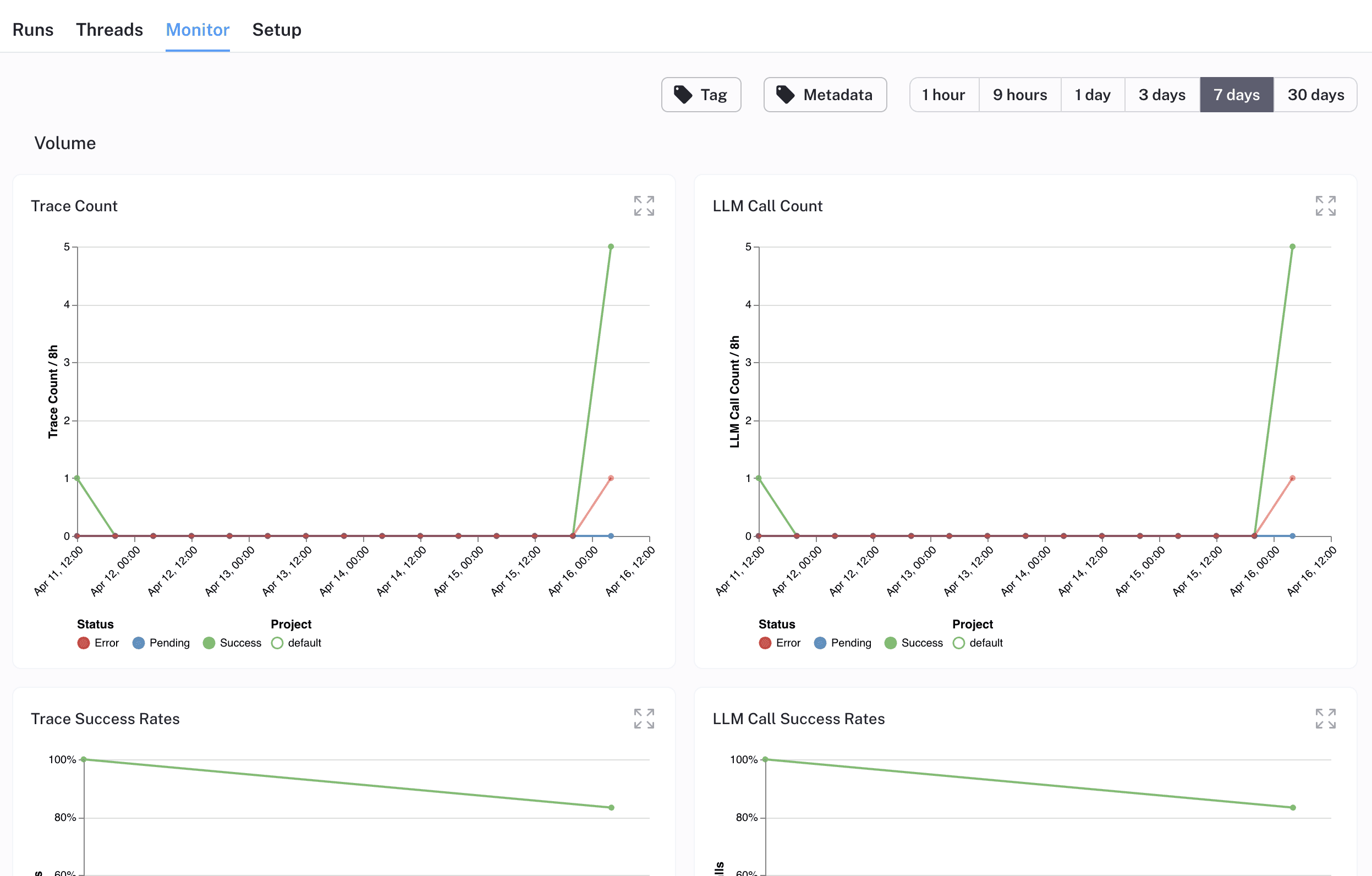

Lastly, you can monitor performance metrics for your agent in the Monitor tab. It shows metrics such as trace count, LLM call success rate, time to first token and much more.

LangSmith is a great choice of tool for those building agents and one that’s extremely simple to integrate. There is so much more functionality that we didn’t explore, but it covers a lot of the application feedback loop of collecting/annotating data → monitoring and then repeating.

Deploy to Cerebrium

To deploy this application to Cerebrium, simply run the command cerebrium deploy in your terminal. Just make sure to delete the if __name__ == "__main__" block, since that was only there to run locally.

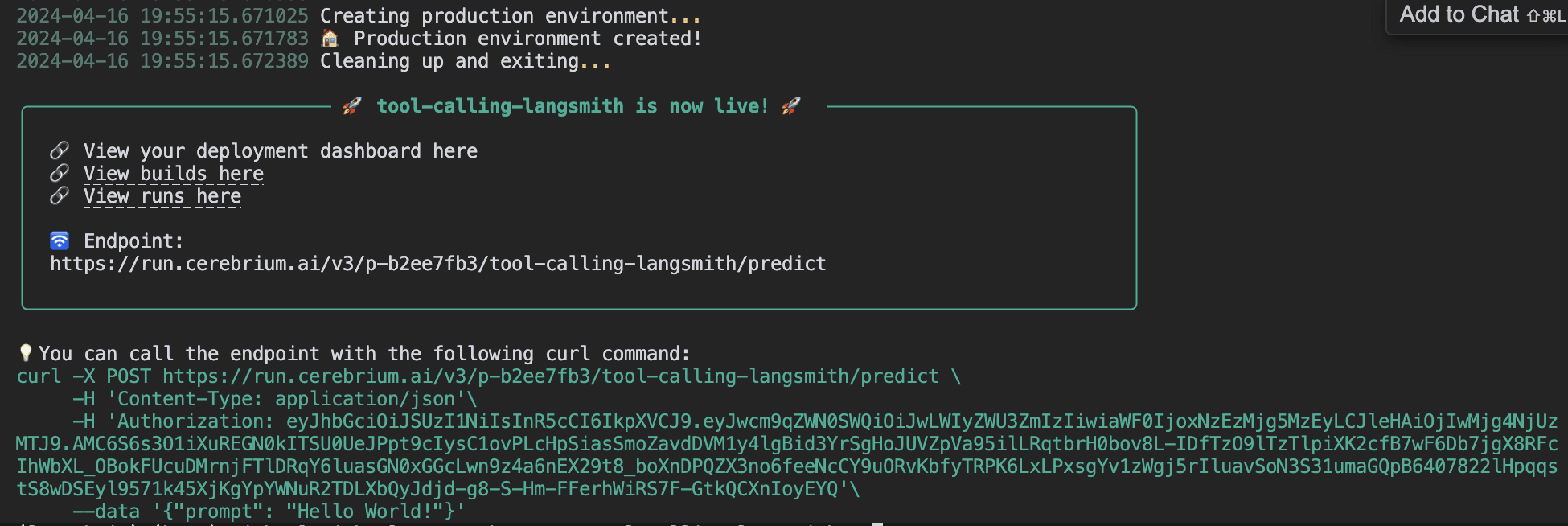

If it deployed successfully, you should see something like this:

You can now call this via an API endpoint and our agent will remember the conversation as long as the session id is the same. Cerebrium will automatically scale your application based on demand, and you only pay for the compute you use.

You can find the final version of the code here.

Further improvements

In this tutorial I didn’t get to the following but I think it would be interesting to implement:

You can stream back the responses to the user to make the experience more seamless. LangChain makes it easy to do this.

Integrate with my email so that if I tag Claire in a thread, it can go through the conversation and get context to schedule the meeting.

Add voice capabilities so that someone can phone me and book a time and Claire can respond.

Conclusion

The integration of LangChain, LangSmith and Cerebrium makes it extremely easy to deploy agents at scale! LangChain is a great framework for orchestrating LLMs, tooling and memory, while LangSmith is great for monitoring all of this in production and using it to identify and iterate on edge cases. Cerebrium makes this agent scalable across hundreds or thousands of CPUs/GPUs while you only pay for compute as it’s used.

Tag us as @cerebriumai in extensions you make to the code repository so we can share it with our community.