In this tutorial, we will guide you through implementing the TensorRT-LLM framework to serve the Llama 3 8B model on the Cerebrium platform. TensorRT-LLM is a powerful framework for optimising machine learning models for inference. It can lead to significant improvements in performance, especially in inference speed and throughput.

In this tutorial we will achieve ~1700 output tokens per second (FP16) on a single Nvidia A10 instance; however, you can go up to ~4500 output tokens per second on a single Nvidia A100 40GB instance, or even ~19,000 tokens per second on an H100. For further improvements, you can use speculative sampling or FP8 quantisation to improve latency and throughput. You can view the official benchmarks across different GPU types, model sizes and input/output token lengths here.

Overview

TensorRT-LLM is a specialised library within NVIDIA's TensorRT, a high-performance deep learning inference platform. It is designed to accelerate large language models (LLMs) on NVIDIA GPUs. It can significantly improve the performance of your machine learning models; however, it comes at the expense of a very complicated setup process.

You are required to convert and build the model using very specific arguments that replicate your workloads as closely as possible. If you don’t configure these steps properly, you may see subpar performance, and deployment becomes much more complicated. We will cover these concepts in depth throughout the tutorial.

Cerebrium Setup

If you don’t have a Cerebrium account, you can create one by signing up here and following the documentation here to get set up.

In your IDE, run the following command to create our Cerebrium starter project: cerebrium init llama-3b-tensorrt. This creates two files:

- main.py - Our entrypoint file where our code lives

- cerebrium.toml - A configuration file that contains all our build and environment settings

TensorRT-LLM has a demo implementation of Llama on its GitHub repo which you can look at here. The first thing you will notice is that TensorRT-LLM requires Python 3.10. Additionally, the code that converts the model weights to the TensorRT format requires a lot of memory, so we need to set this in our configuration file. Please change your cerebrium.toml file to reflect the below:

The most important decision to make is which GPU you would like to run on. Larger models, longer sequence lengths and bigger batches all require more GPU memory, so if throughput is your desired metric, we recommend using an A100/H100. In this example we went with an A10, which gives a good cost/performance trade-off. Also, there are no capacity shortages, so it’s more stable for low-latency enterprise workloads. However, if this is a requirement for you, please reach out.

Let us then install the required pip and apt packages. You can add the following to your cerebrium.toml:

We want to install the tensorrt_llm package after the above installs and want to grab it from the Nvidia PyPI index URL. To do this, we use shell commands, which allow you to run command-line instructions during the build process. These run as the last step of the build process (i.e. after the pip, apt and conda installs).

Add the following under [cerebrium.build] in your cerebrium.toml:

We then need to write some initial code in our main.py that will:

- Download Llama 3 8B from HuggingFace

- Convert the model checkpoints

- Build the TensorRT-LLM inference engine.

At the moment, Cerebrium does not have a way to run code only during the build process (this is a work in progress). However, one easy way to sidestep this is to check whether the file output by the trtllm-build step already exists, meaning the model has already been converted.

To start, we need to go to HuggingFace and accept the model permissions for Llama 3 8B if we haven’t already. It usually takes 30 minutes or less for them to accept your request. Since HuggingFace requires you to be authenticated to download the model weights, we need to authenticate ourselves in Cerebrium before downloading the model.

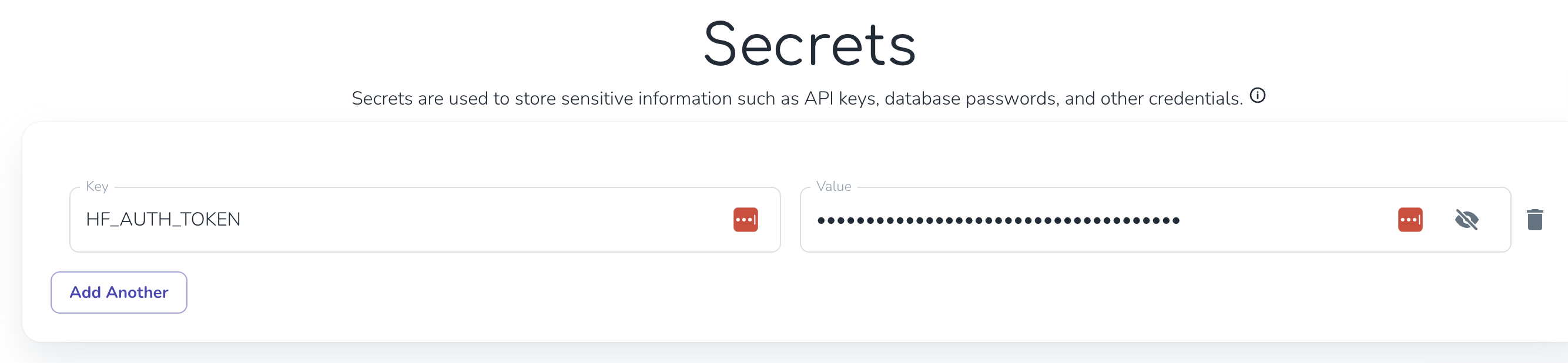

In your Cerebrium dashboard you can add your HuggingFace token as a secret by navigating to “Secrets” in the sidebar. For the sake of this tutorial I called mine “HF_AUTH_TOKEN”. We can now access these values in our code at runtime without exposing them in our code.

You can then add the following code to your main.py to download the model:
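As a rough sketch, the download step could look like the following. It assumes the secret is exposed to the process as the environment variable HF_AUTH_TOKEN, that you want the `meta-llama/Meta-Llama-3-8B-Instruct` repository, and that `/persistent-storage` is a writable volume. The directory names and the helper `engine_is_built` are illustrative, so adjust them to your setup.

```python
import os
from pathlib import Path

# Assumed values: change these to match your own model and storage layout.
MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"  # assumption: the Instruct variant
MODEL_DIR = Path("/persistent-storage/llama-3-8b/hf")
ENGINE_DIR = Path("/persistent-storage/llama-3-8b/engine")


def engine_is_built(engine_dir: Path) -> bool:
    """Return True if the directory already holds at least one *.engine file."""
    return engine_dir.is_dir() and any(engine_dir.glob("*.engine"))


def download_model(model_id: str = MODEL_ID, model_dir: Path = MODEL_DIR) -> None:
    """Log in to HuggingFace and download the model weights."""
    # Imported lazily so this module can be loaded without the package installed.
    from huggingface_hub import login, snapshot_download

    # Assumption: the Cerebrium secret is available as an environment variable.
    login(token=os.environ["HF_AUTH_TOKEN"])
    snapshot_download(repo_id=model_id, local_dir=str(model_dir))


def ensure_model_downloaded() -> None:
    """Download the weights only when no built engine is present yet."""
    if not engine_is_built(ENGINE_DIR):
        download_model()
```

Calling `ensure_model_downloaded()` near the top of main.py keeps the download out of the cold-start path once the engine exists.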

In the above code, we log in to HuggingFace using our HF_AUTH_TOKEN and download the Llama 3 8B model. We check whether ENGINE_DIR exists so that this code does not run on every cold start, but only when the final TensorRT-LLM engine files don’t exist.

Setup TensorRT-LLM

Next, we need to convert the downloaded model. We can use the script that exists in the TensorRT-LLM repo. To download this script to your Cerebrium instance, put the following in your shell commands.

This downloads the specific script file to your instance. Shell commands are an array of strings, so you can simply add it to the existing shell commands.

Converting and Compiling TensorRT-LLM engine

Below we convert the model into float16 format because it results in marginally higher performance than float32. You can go even further and use a quantised model (FP8), which will give you the lowest latency.

What allows TensorRT-LLM to achieve its high throughput is that the engine is compiled in advance against predefined settings that you choose based on your expected workloads. Compilation makes concrete choices about which CUDA kernels to execute for each operation, and those kernels are optimised for the specific tensor types and shapes and for the specific hardware the engine runs on.

So we need to specify the maximum input and output lengths as well as the typical batch size. The closer these values are to production, the higher our throughput will be. There are many different options you can pass to the trtllm-build command to tune the engine for your specific workload. We selected just two plugins that accelerate two core components. You can read more about the plugin options here.

We then need to run the convert_checkpoint script and then run the trtllm-build command in order to build the TensorRT-LLM model. You can add the following code to your main.py:
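A minimal sketch of these two steps follows. The flag names match the TensorRT-LLM releases available when Llama 3 came out, but they change between versions (newer releases replace `--max_output_len` with `--max_seq_len`, for example), so check `trtllm-build --help` for your installed version. The choice of the GEMM and GPT attention plugins, the limits of 8/1024/1024 and the helper names are assumptions for illustration.

```python
import subprocess
import sys
from pathlib import Path


def build_commands(model_dir, ckpt_dir, engine_dir, max_batch_size=8,
                   max_input_len=1024, max_output_len=1024,
                   script="convert_checkpoint.py"):
    """Return the (convert, build) argument lists without running them."""
    convert_cmd = [
        sys.executable, script,
        "--model_dir", str(model_dir),
        "--output_dir", str(ckpt_dir),
        "--dtype", "float16",
    ]
    build_cmd = [
        "trtllm-build",
        "--checkpoint_dir", str(ckpt_dir),
        "--output_dir", str(engine_dir),
        # Assumption: these are the two plugins enabled for the engine.
        "--gemm_plugin", "float16",
        "--gpt_attention_plugin", "float16",
        # Set these as close to your production workload as possible.
        "--max_batch_size", str(max_batch_size),
        "--max_input_len", str(max_input_len),
        "--max_output_len", str(max_output_len),  # version dependent flag
    ]
    return convert_cmd, build_cmd


def convert_and_build(model_dir, ckpt_dir, engine_dir, **limits):
    """Run both steps, skipping the work if an engine file already exists."""
    if any(Path(engine_dir).glob("*.engine")):
        return
    for cmd in build_commands(model_dir, ckpt_dir, engine_dir, **limits):
        # check=True raises CalledProcessError if a step fails.
        subprocess.run(cmd, check=True)
```

Keeping the command construction in its own function makes it easy to print or log the exact arguments before committing to a long build.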

You will see we run these command-line arguments as a subprocess. I did it this way, rather than as shell commands, for two reasons:

- Currently Cerebrium doesn’t support Secrets in shell commands and I need the model to be downloaded before I can continue with the other model conversion steps.

- It seems much cleaner to reuse variables and use subprocesses than to squash everything into the cerebrium.toml file.

Model Instantiation

Now that our model is converted to our specifications, let us initialise the model and set it up based on our requirements. This code runs on every cold start and takes roughly 10-15s to load the model into GPU memory. If the container is warm, it will run your predict function immediately, which we cover in the next section.

Above your predict function, add the following code.
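One way this initialisation could look is sketched below. It assumes the `ModelRunner` class from `tensorrt_llm.runtime` and the `AutoTokenizer` class from `transformers`. The helper names are hypothetical, and the fallback to the EOS token covers tokenizers that do not define a pad token.

```python
def resolve_pad_id(tokenizer) -> int:
    """Use the tokenizer's pad token if it has one, otherwise fall back to EOS."""
    if tokenizer.pad_token_id is not None:
        return tokenizer.pad_token_id
    return tokenizer.eos_token_id


def load_runtime(model_dir, engine_dir):
    """Load the tokenizer and the compiled engine (run once per cold start)."""
    # Imported lazily: both packages are heavy and only needed on the GPU host.
    from transformers import AutoTokenizer
    from tensorrt_llm.runtime import ModelRunner

    tokenizer = AutoTokenizer.from_pretrained(str(model_dir))
    # rank=0 assumes a single GPU engine, as in this tutorial.
    runner = ModelRunner.from_dir(engine_dir=str(engine_dir), rank=0)
    return tokenizer, runner, resolve_pad_id(tokenizer)
```

Calling `load_runtime` at module level, outside the predict function, means the result is reused by every request served by a warm container.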

We need to pass the input that a user sends into our prompt template and cater for special tokens. Add the following function that will handle this for us:
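The sketch below follows the chat format published for the Llama 3 Instruct models. It assumes you are serving the Instruct variant; the function name and the default system prompt are illustrative.

```python
def format_prompt(user_prompt: str,
                  system_prompt: str = "You are a helpful assistant.") -> str:
    """Wrap the user input in the Llama 3 Instruct chat template."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system_prompt}<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_prompt}<|eot_id|>"
        # Ending on the assistant header cues the model to write the reply.
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )
```

Because the template already starts with `<|begin_of_text|>`, tokenize the result with `add_special_tokens=False` so the tokenizer does not prepend a second one.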

Before we get to our predict function that runs at runtime, we need to define our Pydantic object, which makes sure user requests conform to this standard and provides default values.
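A request model along these lines would work. The field names and default values are assumptions chosen for illustration, not values prescribed by TensorRT-LLM or Cerebrium.

```python
from pydantic import BaseModel


class Item(BaseModel):
    """Shape of an incoming request; only the prompt is required."""
    prompt: str
    system_prompt: str = "You are a helpful assistant."
    max_tokens: int = 256      # upper bound on generated tokens
    temperature: float = 0.7   # sampling temperature
    top_k: int = 50            # sample from the k most likely tokens
    top_p: float = 0.9         # nucleus sampling threshold
```

For example, `Item(prompt="Hello")` fills in every default, while a request without a prompt is rejected with a validation error before it reaches the model.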

Inference function

Lastly, let us bring this all together with our predict function:

Now that our code is complete, we can deploy the application with the command: cerebrium deploy.
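The generation step at the heart of predict could be sketched as follows. It assumes a `ModelRunner`-style object whose `generate` method accepts the keyword arguments shown and returns `output_ids` shaped [batch, beams, sequence], as in the TensorRT-LLM example scripts. The helper names are hypothetical, and the caller supplies an already formatted prompt along with the end and pad token ids.

```python
def strip_prompt(output_ids, input_len, end_id):
    """Drop the prompt tokens and everything from the first end token onwards."""
    completion = list(output_ids[input_len:])
    if end_id in completion:
        completion = completion[: completion.index(end_id)]
    return completion


def generate_text(prompt_text, tokenizer, runner, end_id, pad_id,
                  max_new_tokens=256, temperature=0.7, top_k=50, top_p=0.9):
    """Tokenize the prompt, run the engine and decode only the new tokens."""
    import torch  # imported lazily; only needed on the GPU host

    input_ids = tokenizer.encode(prompt_text, add_special_tokens=False)
    batch = [torch.tensor(input_ids, dtype=torch.int32)]
    with torch.no_grad():
        outputs = runner.generate(
            batch_input_ids=batch,
            max_new_tokens=max_new_tokens,
            end_id=end_id,
            pad_id=pad_id,
            temperature=temperature,
            top_k=top_k,
            top_p=top_p,
            return_dict=True,
        )
    # Assumed layout: first batch entry, first beam, prompt followed by output.
    sequence = outputs["output_ids"][0][0].tolist()
    return tokenizer.decode(strip_prompt(sequence, len(input_ids), end_id))
```

With the Llama 3 Instruct template, a sensible `end_id` is the id of the `<|eot_id|>` token, since that is what the model emits when it finishes its turn.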

On initial deploy, it will take about 15-20 minutes since, besides installing all your packages and dependencies, it will download the model and convert it to the TensorRT-LLM format. Once completed, it should output a curl command which you can copy and paste to test your inference endpoint.

TensorRT-LLM is one of the top-performing inference frameworks on the market, especially if you know the details of your expected workloads. You should now have a low-latency, high-throughput endpoint that can auto-scale to tens of thousands of inferences, all while only paying for the compute you use.

To view the final version of the code, you can look here.
